Introduction

The Vollo SDK is designed for low latency streaming inference of machine learning (ML) models on FPGA platforms.

Evaluation

You can easily discover the latency Vollo can achieve for your models, without needing an FPGA or a Vollo license. Use the online Vollo Sandbox here.

Alternatively, you can download and install the Vollo SDK on your own computer if you wish to evaluate Vollo offline. You won't need an FPGA or a Vollo license for this either. See Getting Started for details.

User Guide Overview

This document outlines the following:

- Installation

- Key features of Vollo

- Steps to get started with Vollo

- The Vollo Compiler API

- The Vollo Runtime API

- Hardware requirements and setup

Installation

The latest SDK is available for download from https://github.com/MyrtleSoftware/vollo-sdk/releases.

Download the vollo-sdk-<version>.run self-extractable archive and execute it to extract the Vollo SDK contents to the current directory:

chmod +x vollo-sdk-<version>.run

./vollo-sdk-<version>.run

Key Features

Vollo accelerates machine learning inference for low latency streaming models typically found in financial trading or fraud detection systems such as:

- Market predictions

- Risk analysis

- Anomaly detection

- Portfolio optimisation

Vollo is able to process a range of models, including models which maintain state while streaming, such as convolutional models.

Key characteristics of Vollo are:

- Low latency inference of machine learning models, typically between 5-10μs.

- High accuracy inference through use of Brain Floating Point 16 (bfloat16) numerical format.

- High density processing in a 1U server form factor suitable for co-located server deployment.

- Compiles a range of PyTorch models for use on the accelerator.

Getting Started

You can get started with evaluating your ML model's performance on Vollo using the Vollo compiler and Vollo virtual machine (VM), which don't require an FPGA accelerator.

When you are ready, you can run inferences with your model on a Vollo FPGA accelerator using an evaluation license.

Performance estimation and model design with the Vollo compiler

You can use the Vollo compiler and VM to compile and estimate the performance of your model in an ML user's environment without any accelerator.

The Vollo compiler and Vollo VM execution times are typically on the order of seconds, enabling fast model iteration for tuning models to meet a latency target.

To estimate performance of your model with the Vollo SDK:

- Download and extract the Vollo SDK.

- Install the Vollo compiler Python libraries.

- Compile your model using the Vollo compiler and run the compiled model in the Vollo VM to generate an estimate of the compute latency that will be achieved with Vollo. See Vollo compiler Example 1 for a fully worked example of this, including performance estimation.

- Add in IO latency for your model characteristics in order to estimate end-to-end latency.

- Iterate on your model architecture to meet your combined latency and accuracy requirements.
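The compile and IO steps above combine into a simple sum. The sketch below assumes compute and IO latencies are additive; the numbers are hypothetical placeholders, not measured Vollo figures. In practice you would substitute the value printed by program.compute_duration_per_inference_us() and the IO round-trip figure that matches your model's input and output sizes.

```python
def estimate_end_to_end_us(compute_us: float, io_round_trip_us: float) -> float:
    """Rough end-to-end latency estimate: compute time plus host<->accelerator IO.

    compute_us: compute estimate from the Vollo VM (which excludes IO).
    io_round_trip_us: IO round-trip latency for your input/output sizes.
    The two are assumed to simply add up.
    """
    return compute_us + io_round_trip_us


# Hypothetical placeholder values, for illustration only
compute_us = 1.5
io_round_trip_us = 2.0
latency_target_us = 5.0

total_us = estimate_end_to_end_us(compute_us, io_round_trip_us)
print(f"estimated end-to-end latency: {total_us:.1f}us")
print("meets target" if total_us <= latency_target_us else "iterate on the model")
```

If the estimate misses the target, the last step (iterating on the architecture) applies; the VM makes each iteration cheap.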

Validating inference performance using the Vollo FPGA accelerator

When you are ready to run inferences with your models on a Vollo accelerator, you will need a compatible FPGA based PCIe accelerator card and a Vollo license.

Evaluation licenses can be provided free of charge by contacting vollo@myrtle.ai.

To validate inference performance on Vollo:

- Follow the steps to program the Intel Agilex or AMD V80 and license the FPGA.

- Compile your model and save it as a .vollo program file using the Vollo compiler. See Vollo compiler Example 1 for a fully worked example.

- Run and benchmark your model on the accelerator using the Vollo runtime C example. Make sure to pass the example application the path to your saved .vollo program when you invoke it on the command line.

Note that the Vollo SDK includes prebuilt FPGA bitstreams for selected PCIe accelerator cards, so no FPGA compilation or configuration is required after initial accelerator setup. As a result, loading user models to run on Vollo takes under a second, enabling fast onboard iteration and evaluation of different models.

Vollo Compiler

The Vollo compiler is made up of two Python libraries:

- The vollo-torch PyTorch frontend to the compiler.

- The vollo-compiler backend that can transform and compile a model to a Vollo program (.vollo file).

The Vollo Runtime section describes how to run a Vollo program on a Vollo accelerator. The Vollo compiler API also includes functionality to simulate and estimate performance of Vollo programs.

Installation

Set up Vollo environment variables by sourcing setup.sh in bash.

Install the wheel files for the Vollo compiler libraries. It's recommended that you install these into a virtual environment.

Note: the packaged wheels only support Python 3.7 or later.

python3 -m venv vollo-venv

source vollo-venv/bin/activate

pip install --upgrade pip

pip install "$VOLLO_SDK"/python/*.whl

API Reference

This chapter walks through examples of how to use the Vollo compiler that should cover the most commonly used parts of the API.

A more complete API reference can be found here.

Supported Models

The Vollo compiler supports PyTorch models that use the following operations:

| Operation | Support Notes | FP32 Support |
|---|---|---|
| Pointwise arithmetic ops | +, -, *, /, maximum, minimum, the pointwise overload of max and min | ✅ +, -, *, maximum, minimum |
| Inequality | >, <, >=, <= | ✅ |
| Clamp ops | clamp, relu | ✅ |
| Matrix multiplication | Linear; matmul / @ where one side is a constant | ❌ |
| Convolution | Via vollo_torch.nn.PaddedConv1d, with groups == 1 or groups == in_channels == out_channels | ❌ |
| LSTM | torch.nn.LSTM, vollo_torch.nn.LSTM | ❌ |
| Indexing / slicing | Partial square bracket [] support; index_select, narrow | ✅ |
| sum | keepdim = True required when summing over data dim | ❌ |
| where | If the where condition is an inequality comparison | ✅ |
| Concatenation | cat, concat, concatenate | ✅ |
| Stacking | stack, vstack, row_stack, hstack, column_stack, dstack | ✅ |
| LayerNorm | | ❌ |
| RMSNorm | Via vollo_torch.nn.RMSNorm for torch versions < 2.4 | ❌ |
| Batch Normalization | BatchNorm1d, BatchNorm2d, BatchNorm3d | ✅ |
| Transposing | transpose, swapdims, swapaxes, t, T, mT, permute; see Tensor Memory Format below | ✅ |
| squeeze, unsqueeze | | ✅ |
| Reshaping | reshape, view, reshape_as, view_as, flatten; stride of data dimension must be unchanged | ✅ |
| Broadcasting | Implicitly or with broadcast_to, broadcast_tensors, expand, expand_as | ✅ |
| sqrt | torch.sqrt, torch.rsqrt | ❌ |
| tanh | torch.tanh, torch.nn.Tanh | ❌ |
| Exponential | torch.exp, torch.exp2 | ❌ |
| silu | torch.nn.functional.silu, torch.nn.SiLU | ❌ |
| softplus | torch.nn.functional.softplus, torch.nn.Softplus | ❌ |
| softmax | torch.softmax, torch.nn.Softmax | ❌ |
| sigmoid | torch.sigmoid, torch.nn.functional.sigmoid, torch.nn.Sigmoid | ❌ |

Models that take multiple input tensors and return multiple output tensors (i.e. a tuple of tensors) are supported.

Note that for operations like Dropout and BatchNorm1d (which change behaviour at inference time) to be handled correctly, the model should be in eval mode.
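In plain PyTorch this is a single model.eval() call before tracing. The model below is a made-up example containing both kinds of layer; nothing in it is Vollo-specific.

```python
import torch
import torch.nn as nn

# A made-up model containing layers whose behaviour changes at inference time
model = nn.Sequential(
    nn.Linear(16, 16),
    nn.BatchNorm1d(16),
    nn.Dropout(p=0.5),
    nn.ReLU(),
)

# Switch to eval mode before tracing/compiling: Dropout becomes the identity and
# BatchNorm1d uses its running statistics instead of per-batch statistics.
model.eval()

x = torch.randn(1, 16)
with torch.no_grad():
    out1 = model(x)
    out2 = model(x)

# In eval mode the forward pass is deterministic
print(torch.equal(out1, out2))
```

The model is then ready to be passed to vollo_torch.fx.prepare_shape as in the examples that follow.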

Tensor Memory Format

Vollo supports operations on tensors in data-last or channels-last memory format, i.e. the innermost dimension of the tensors should be the data or channels dimension rather than the batch or sequence dimension, if there is one. This is because the Vollo accelerator's compute units operate on contiguous vectors (1D tensors) and have limited support for rearranging tensor data, particularly transposing it.
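To make the layout requirement concrete, here is a plain PyTorch sketch (no Vollo APIs) of rearranging a (batch, channels, sequence) tensor into channels-last form, for example on the host before feeding a model that is not one of the sequence layers listed below. The shapes are arbitrary illustrative values.

```python
import torch
import torch.nn as nn

batch, channels, seq = 1, 32, 5

# PyTorch's Conv1d convention: (batch, channels, sequence); the innermost
# (stride-1) dimension is the sequence.
x_conv_layout = torch.randn(batch, channels, seq)

# Channels-last: move channels to the innermost dimension and make the data
# contiguous, so each timestep's channel vector is stored contiguously.
x_channels_last = x_conv_layout.permute(0, 2, 1).contiguous()  # (batch, seq, channels)

# nn.Linear operates on the innermost dimension, so it consumes
# channels-last tensors directly.
proj = nn.Linear(channels, 8)
y = proj(x_channels_last)  # (batch, seq, 8)

print(x_channels_last.shape, x_channels_last.stride(), y.shape)
```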

There are some notable exceptions that do not require channels-last tensors:

- Layers that operate on sequences: Conv1d, LSTM. Vollo supports the same (batch, channels, sequence) memory format that PyTorch uses for these layers, but requires applying the streaming transform to models that contain them.

- General matrix multiplication (as opposed to the more restrictive Linear): matmul, @.

TorchScript

The Vollo compiler supports standard PyTorch modules (torch.nn.Module); it does not support TorchScript modules (torch.jit.ScriptModule).
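In practice this means keeping a reference to the original eager module: both torch.jit.trace and torch.jit.script return ScriptModule instances. A plain PyTorch check, using a made-up Net class:

```python
import torch
import torch.nn as nn

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(4, 2)

    def forward(self, x):
        return torch.relu(self.fc(x))

model = Net().eval()
# Tracing (like scripting) produces a TorchScript module
traced = torch.jit.trace(model, torch.randn(4))

print(isinstance(traced, torch.jit.ScriptModule))  # True: not accepted by the Vollo compiler
print(isinstance(model, torch.jit.ScriptModule))   # False: a plain nn.Module, use this one
```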

Example 1: MLP

Basic models like multilayer perceptrons (MLP) can be defined without any changes from a standard PyTorch definition.

import torch
import torch.nn as nn
import torch.nn.functional as F

class MLP(nn.Module):
    def __init__(self, input_size, output_size, hidden_size):
        super().__init__()
        self.fc1 = nn.Linear(input_size, hidden_size)
        self.fc2 = nn.Linear(hidden_size, hidden_size)
        self.out = nn.Linear(hidden_size, output_size)

    def forward(self, x):
        x = F.relu(self.fc1(x))
        residual = x
        x = F.relu(self.fc2(x)) + residual
        return self.out(x)

# Instantiate the model
input_size = 784
output_size = 10
hidden_size = 128
model = MLP(input_size, output_size, hidden_size)

The first stage of compiling a model is to lower it to NNIR. NNIR is the Vollo compiler's intermediate representation for representing neural network graphs. NNIR sits at a similar level of abstraction to ONNX, with most NNIR operators having direct ONNX or PyTorch analogues.

import vollo_torch

# An input to the model needs to be provided so that its execution can be

# traced

input = torch.randn(input_size)

# Trace the model's execution to annotate it with activation shapes

(model, expected_output) = vollo_torch.fx.prepare_shape(model, input)

nnir = vollo_torch.fx.nnir.to_nnir(model)

NNIR can be compiled to a Vollo program given a Vollo accelerator configuration. You can find the preset configurations that can be instantiated in the API reference.

import vollo_compiler

# Replace the Config in the line below with the Config for the accelerator you

# are using

config = vollo_compiler.Config.ia_420f_c6b32()

program = nnir.to_program(config)

Vollo programs have all their memory allocated statically. You can print the static resource usage of a program like this:

print(program.metrics())

Save the program to a file so that it can be used for inference by the Vollo runtime.

program.save('mlp.vollo')

Simulation

The Vollo compiler can be used to simulate programs in the Vollo virtual machine (VM). This is an instruction level simulation of the Vollo accelerator which can be used to:

- Estimate performance of a model. The VM is not cycle accurate but provides an indicative cycle count of a model.

- Verify the correctness of the compilation stages, including the effect of quantisation.

Construct a VM instance with your program loaded. Run the VM by passing it a numpy array of the input. It should produce the same result as the source PyTorch model, within some range of floating point error.

vm = program.to_vm()

vm_output = vm.run(input.detach().numpy())

torch.testing.assert_close(expected_output, torch.from_numpy(vm_output), atol = 1e-2, rtol = 1e-2)

print("cycle count:", program.cycle_count_per_inference())

# Translate the estimated cycle count to a duration for the compute (not

# including IO) in microseconds, using the bitstream clock speed (320 MHz)

print(f"latency (compute): {program.compute_duration_per_inference_us():.1f}us")

The VM records the number of cycles the program took to execute. Note that there will be some discrepancy between the VM's cycle count and the true cycle count, so the VM's cycle count should be treated as an estimate.

Also note that the VM does not model the latency of the communication between the host and the Vollo accelerator. This communication latency can be estimated using our IO Round Trip benchmarks.
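The conversion from cycles to compute time is the cycle count divided by the clock frequency. A sketch of that arithmetic, assuming the 320 MHz bitstream clock quoted in the example comments (check the clock of the bitstream you use). That compute_duration_per_inference_us() computes exactly this is an assumption.

```python
def cycles_to_us(cycles: int, clock_mhz: float = 320.0) -> float:
    """Convert a cycle count to microseconds at the given clock frequency.

    At f MHz the clock ticks f times per microsecond, so
    duration_us = cycles / f. This is compute time only; IO is not included.
    """
    return cycles / clock_mhz


# e.g. 800 cycles at 320 MHz
print(f"{cycles_to_us(800):.1f}us")
```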

Example 2: CNN

Vollo supports streaming 1D convolutional neural networks (CNNs), which might require you to make some changes to your model if you are currently using a non-streaming 1D CNN.

A streaming convolution applies the convolutional kernel to the most recent window of the input sequence as the data points in the input sequence arrive. This differs from a non-streaming convolution, which expects to receive a complete input sequence and applies its convolutional kernel to each window of that input.

Streaming convolutions will have much lower latency than non-streaming convolutions, but they have to maintain some state, namely the most recent window of input, which makes them unnatural to define in ML frameworks like PyTorch.

To enable the use of streaming convolutions, the Vollo compiler includes a streaming_transform which transforms a non-streaming CNN into a streaming CNN, as long as the non-streaming CNN meets certain constraints.
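The difference between the two can be sketched in plain PyTorch. The StreamingConv1d class below is a hypothetical illustration, not part of vollo_torch and not how Vollo implements it: it holds the most recent window of input as state and applies an nn.Conv1d kernel to that window as each new data point arrives. Once the window has filled, its outputs match the non-streaming convolution over the whole sequence.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)


class StreamingConv1d:
    """Hypothetical streaming wrapper around an nn.Conv1d (single batch element).

    Keeps the most recent kernel_size input vectors as state and applies the
    convolution kernel to that window each time a new timestep arrives.
    """

    def __init__(self, conv: nn.Conv1d):
        self.conv = conv
        k = conv.kernel_size[0]
        # State: most recent window of inputs, shape (in_channels, k), zero-initialised
        self.window = torch.zeros(conv.in_channels, k)

    @torch.no_grad()
    def step(self, x_t: torch.Tensor) -> torch.Tensor:
        # x_t: (in_channels,), one new data point of the input sequence.
        # Drop the oldest element of the window and append the new one.
        self.window = torch.cat([self.window[:, 1:], x_t[:, None]], dim=1)
        # Apply the kernel to the current window; output shape (out_channels,)
        return self.conv(self.window[None])[0, :, 0]


conv = nn.Conv1d(in_channels=4, out_channels=3, kernel_size=3)
seq = torch.randn(4, 10)  # (channels, sequence)

# Non-streaming: needs the whole sequence, yields 10 - (3 - 1) = 8 outputs
with torch.no_grad():
    full = conv(seq[None])[0]  # (3, 8)

# Streaming: feed one timestep at a time
stream = StreamingConv1d(conv)
outs = torch.stack([stream.step(seq[:, t]) for t in range(seq.shape[1])], dim=1)  # (3, 10)

# Once the window is full (t >= kernel_size - 1), the outputs match
print(torch.allclose(outs[:, 2:], full, atol=1e-5))
```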

Using the streaming_transform

The model below is a non-streaming CNN taking an input sequence of length 5 and producing an output of length 1. (It can actually take any input sequence of length 5+n and produce an output of length 1+n, but we will only consider the minimal sequence length, since that is the length of the input context used by each of the output elements.)

import torch
import torch.nn as nn
import torch.nn.functional as F

class CNN(nn.Module):
    def __init__(self, in_channels, out_channels, hidden_channels, kernel_size=3):
        super().__init__()
        # Reduces sequence length by (kernel_size - 1) = 2
        self.conv1 = nn.Conv1d(in_channels, hidden_channels, kernel_size)
        # Reduces sequence length by (kernel_size - 1) = 2
        self.conv2 = nn.Conv1d(hidden_channels, out_channels, kernel_size)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = F.relu(self.conv2(x))
        return x

# Instantiate the model
in_channels = 32
out_channels = 1
hidden_channels = 128
model = CNN(in_channels, out_channels, hidden_channels)
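A quick plain-PyTorch check of the sequence-length arithmetic described above. It rebuilds the same layer structure with nn.Sequential so that it runs on its own: each kernel_size=3 convolution removes 2 elements, so an input of length 5 + n gives an output of length 1 + n.

```python
import torch
import torch.nn as nn

# Same layer structure as the CNN above: two unpadded Conv1d layers with kernel_size=3
in_channels, hidden_channels, out_channels, kernel_size = 32, 128, 1, 3
model = nn.Sequential(
    nn.Conv1d(in_channels, hidden_channels, kernel_size), nn.ReLU(),
    nn.Conv1d(hidden_channels, out_channels, kernel_size), nn.ReLU(),
).eval()

# Each layer removes (kernel_size - 1) elements, so the minimal context is
# 2 * (kernel_size - 1) + 1 = 5 input elements per output element.
context = 2 * (kernel_size - 1) + 1

lengths = {}
with torch.no_grad():
    for n in range(4):
        x = torch.randn(1, in_channels, context + n)
        lengths[context + n] = model(x).shape[-1]

print(lengths)  # {5: 1, 6: 2, 7: 3, 8: 4}
```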

In order to apply the streaming_transform, the torch.nn.Conv1d layers need to be replaced with vollo_torch.nn.PaddedConv1d layers.

import torch
import torch.nn as nn
import torch.nn.functional as F
import vollo_torch.nn

class CNN(nn.Module):
    def __init__(self, in_channels, out_channels, hidden_channels, kernel_size=3):
        super().__init__()
        self.conv1 = vollo_torch.nn.PaddedConv1d(in_channels, hidden_channels, kernel_size)
        self.conv2 = vollo_torch.nn.PaddedConv1d(hidden_channels, out_channels, kernel_size)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = F.relu(self.conv2(x))
        return x

# Instantiate the model
in_channels = 32
out_channels = 1
hidden_channels = 128
model = CNN(in_channels, out_channels, hidden_channels)

These PaddedConv1d layers are identical to torch.nn.Conv1d, but with left padding pre-applied to the input so as not to reduce the sequence length.

This PaddedConv1d model is still a non-streaming model, which now takes an input sequence of length 5 and produces an output of length 5. Its relationship to the original Conv1d model is that, given the same model parameters (weights, biases, etc.) and input sequence, the last element of the output sequence of the PaddedConv1d model will be equal to the last/only element of the output sequence of the Conv1d model.
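That relationship can be checked with plain PyTorch. This sketch assumes PaddedConv1d pads with kernel_size - 1 zeros on the left, which is an assumption about its internals made here for illustration; in a model for Vollo, use vollo_torch.nn.PaddedConv1d itself.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
kernel_size = 3
conv1 = nn.Conv1d(32, 128, kernel_size)
conv2 = nn.Conv1d(128, 1, kernel_size)


def unpadded(x):
    return F.relu(conv2(F.relu(conv1(x))))


def left_padded(x):
    # Assumed PaddedConv1d behaviour: zero-pad (kernel_size - 1) elements on the
    # left of the sequence axis before each convolution, preserving the length.
    x = F.relu(conv1(F.pad(x, (kernel_size - 1, 0))))
    return F.relu(conv2(F.pad(x, (kernel_size - 1, 0))))


x = torch.randn(1, 32, 5)
with torch.no_grad():
    y_unpadded = unpadded(x)   # shape (1, 1, 1)
    y_padded = left_padded(x)  # shape (1, 1, 5)

# Same parameters and input: the last padded output equals the only unpadded output
print(torch.allclose(y_padded[..., -1], y_unpadded[..., -1], atol=1e-5))
```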

The PaddedConv1d model can be lowered to NNIR and have the streaming_transform applied.

batch_size = 1

sequence_length = 5

input = torch.randn(batch_size, in_channels, sequence_length)

(model, expected_output) = vollo_torch.fx.prepare_shape(model, input)

nnir = vollo_torch.fx.nnir.to_nnir(model)

# Provide the streaming transform with index of the sequence axis

(nnir, output_axis) = nnir.streaming_transform(2)

The resulting NNIR graph represents a streaming CNN, i.e. containing state, that takes a single data point of a sequence as input and produces a single data point as output, updating its input window state in the process. Input sequences for the streaming CNN need to be fed in sequentially, e.g. in a loop. For example, using the VM:

import vollo_compiler

# Replace the Config in the line below with the Config for the accelerator you

# are using

program = nnir.to_program(vollo_compiler.Config.ia_420f_c6b32())

vm = program.to_vm()

vm_outputs = []
for i in range(5):
    # Runs inference on one element of the input sequence, updating the
    # streaming CNN's state
    vm_outputs.append(vm.run(input[:, :, i].detach().numpy()))

torch.testing.assert_close(
    expected_output,
    torch.stack(
        [torch.from_numpy(output) for output in vm_outputs],
        axis=output_axis,
    ),
    atol = 1e-2,
    rtol = 1e-2
)

The streaming CNN satisfies the property that, given an input sequence, the i-th element of the output sequence of the non-streaming CNN will be equal to the output of the i-th iteration of feeding the input to the streaming CNN.

The streaming CNN can be saved and run on the accelerator like any other program:

program.save('cnn.vollo')

Example 3: LSTM

Vollo supports both streaming and non-streaming LSTM models.

Non-streaming LSTM model

To compile an LSTM model as a non-streaming model, you can follow the same steps as in Example 1: MLP, lowering the model to NNIR and then compiling it with an accelerator config.

import torch
import torch.nn as nn
import vollo_compiler
import vollo_torch

class LstmNet(nn.Module):
    def __init__(self, input_size, hidden_size, num_layers, output_size):
        super().__init__()
        self.lstm = nn.LSTM(input_size, hidden_size, num_layers=num_layers)
        self.linear = nn.Linear(hidden_size, output_size)

    def forward(self, x):
        x, _ = self.lstm(x)
        x = self.linear(x)
        return x

input_size = 128
hidden_size = 256
num_layers = 2
output_size = 16
model = LstmNet(input_size, hidden_size, num_layers, output_size)

seq_length = 20
input = torch.randn(seq_length, input_size)

# Trace the model's execution to annotate it with activation shapes
(model, expected_output) = vollo_torch.fx.prepare_shape(model, input)
nnir = vollo_torch.fx.nnir.to_nnir(model)

# Replace this config with the one for the accelerator you are using
config = vollo_compiler.Config.ia_840f_c3b64()
program = nnir.to_program(config)

The example above will give a Vollo program which takes an input tensor of size 20 x 128 and outputs a tensor of size 20 x 16. This is a non-streaming LSTM model, since it takes an entire sequence as input.

As in Example 1: MLP we can construct a VM instance to simulate the Vollo accelerator, allowing us to get bit-accurate results from the compiled model, and a latency estimate.

vm = program.to_vm()

vm_output = vm.run(input.detach().numpy())

torch.testing.assert_close(expected_output, torch.from_numpy(vm_output), atol = 1e-2, rtol = 1e-2)

print(f"latency (compute): {program.compute_duration_per_inference_us():.1f}us")

Streaming LSTM model

If you want the LSTM model to operate on an ongoing stream of data as its input sequence, it is probably more desirable to use a streaming LSTM model. We can use the same PyTorch model defined above. The only additional step required is to call the streaming_transform (detailed in Example 2: CNN) on the NNIR:

input_size = 128

hidden_size = 256

num_layers = 2

output_size = 16

model = LstmNet(input_size, hidden_size, num_layers, output_size)

seq_length = 20

input = torch.randn(seq_length, input_size)

# Trace the model's execution to annotate it with activation shapes

(model, expected_output) = vollo_torch.fx.prepare_shape(model, input)

nnir = vollo_torch.fx.nnir.to_nnir(model)

# We provide the streaming transform with the sequence axis to 'stream' over.

(nnir, output_streaming_axis) = nnir.streaming_transform(0)

assert(output_streaming_axis == 0)

Here, the streaming_transform tells the compiler to treat axis 0 as the sequence dimension, and that we intend to provide the program with a single sequence element per inference, i.e. on each inference we pass in a tensor of size 128 and receive a tensor of size 16. The resulting Vollo program is stateful and updates the internal hidden state and cell state on each inference.

Note that this streaming model will have a much lower latency on Vollo than the non-streaming model. The streaming model only needs to run num_layers = 2 LSTM operations per inference, whereas the non-streaming model needs to run num_layers * seq_length = 2 * 20 = 40 LSTM operations per inference.
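The equivalence that makes a streaming LSTM possible can be checked in plain PyTorch (no Vollo APIs; sizes reduced to keep it fast): running an nn.LSTM over a whole sequence gives the same outputs as feeding it one element at a time while carrying the hidden and cell state forward. In the stepwise form, each call performs only num_layers LSTM steps.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
input_size, hidden_size, num_layers, seq_length = 8, 16, 2, 20
lstm = nn.LSTM(input_size, hidden_size, num_layers=num_layers).eval()
x = torch.randn(seq_length, input_size)  # unbatched: (seq_length, input_size)

with torch.no_grad():
    # Non-streaming: the whole sequence in one call
    full_out, _ = lstm(x)  # (seq_length, hidden_size)

    # Streaming: one element per call, carrying the (hidden, cell) state forward
    state = None
    step_outs = []
    for t in range(seq_length):
        out_t, state = lstm(x[t : t + 1], state)  # out_t: (1, hidden_size)
        step_outs.append(out_t[0])
    stream_out = torch.stack(step_outs, dim=0)

print(torch.allclose(full_out, stream_out, atol=1e-5))
```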

As above, we can now compile this streaming NNIR to a program with a chosen accelerator configuration, and test the program with a VM:

# Replace the Config in the line below with the Config for the accelerator you

# are using

program = nnir.to_program(vollo_compiler.Config.ia_840f_c3b64())

vm = program.to_vm()

vm_outputs = []
for i in range(seq_length):
    # Note that the VM takes a single sequence element per run
    vm_outputs.append(vm.run(input[i, :].detach().numpy()))

torch.testing.assert_close(
    expected_output,
    torch.stack(
        [torch.from_numpy(output) for output in vm_outputs],
        axis=output_streaming_axis,
    ),
    atol = 1e-2,
    rtol = 1e-2
)

print(f"latency (compute): {program.compute_duration_per_inference_us():.1f}us")

Example 4: Mixed Precision

By default, operations on Vollo run in bfloat16 (BF16) format¹, but there is support for some operations to be run in float32 (FP32).

See Supported Models for a list of which operations have FP32 support.
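To get a feel for the difference between the two formats, the plain PyTorch snippet below (no Vollo APIs) compares their precision. It illustrates the number formats only and does not model how Vollo rounds internally.

```python
import torch

bf16 = torch.finfo(torch.bfloat16)
fp32 = torch.finfo(torch.float32)

# bfloat16 keeps float32's 8-bit exponent (so a similar range) but has only
# 7 explicit mantissa bits, so its machine epsilon is 2**-7 rather than 2**-23.
print(bf16.eps, fp32.eps)

# 1 + 2**-8 is exactly representable in float32 but lies halfway between two
# bfloat16 values; round-to-nearest-even takes it back to 1.0.
x = torch.tensor(1.0 + 2**-8)
print(x.item(), x.to(torch.bfloat16).item())
```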

To run operations in FP32, place them in a vollo_torch.Fp32Activations context. The following example shows an MLP with some pre-processing on its inputs in FP32:

import torch
import torch.nn as nn
import torch.nn.functional as F
import vollo_torch

class PreprocessMLP(nn.Module):
    def __init__(self, input_size, output_size, hidden_size):
        super().__init__()
        self.fc1 = nn.Linear(input_size, hidden_size)
        self.fc2 = nn.Linear(hidden_size, hidden_size)
        self.out = nn.Linear(hidden_size, output_size)

    def forward(self, x, y):
        # Pre-processing runs in FP32
        with vollo_torch.Fp32Activations():
            z = x + 0.763 * y
            z = torch.clamp(z, -2.633, 2.633)
        # The rest of the MLP runs in the default BF16
        z = F.relu(self.fc1(z))
        residual = z
        z = F.relu(self.fc2(z)) + residual
        return self.out(z)

input_size = 784
output_size = 10
hidden_size = 128
model = PreprocessMLP(input_size, output_size, hidden_size)

The inputs and outputs of a model can also be FP32. The default precision is BF16; if FP32 is required, this must be specified when calling to_nnir:

import vollo_compiler

inputs = [torch.randn(input_size), torch.randn(input_size)]

(model, expected_output) = vollo_torch.fx.prepare_shape(model, *inputs)

nnir = vollo_torch.fx.nnir.to_nnir(

model,

inputs_precisions = [vollo_compiler.NumberFormat.FP32, vollo_compiler.NumberFormat.FP32],

outputs_precisions = [vollo_compiler.NumberFormat.BF16]

)

# Note that the printed NNIR will be annotated with the precisions of each layer

# (See the activation_precision fields of the layers)

print(nnir)

Note that the model's inputs_precisions and outputs_precisions determine the data format sent and received between the Vollo runtime and the Vollo accelerator. Where possible, make the precisions in the model match the precisions of the data you will provide to the runtime. If these precisions do not match, the runtime converts the values in software, which can be slow.

config = vollo_compiler.Config.ia_420f_c6b32()

program = nnir.to_program(config)

¹ This is true of most operations; however, intermediate values in layer calculations are sometimes stored in higher precision, e.g. in the accumulation of dot products.
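A plain PyTorch illustration of why accumulators are often kept in higher precision. This is not a model of Vollo's datapath. Summing many small bfloat16 values with a bfloat16 accumulator stalls once the spacing between representable totals becomes large relative to the addend, while a float32 accumulator over the same inputs does not.

```python
import torch

# 1000 copies of 0.01 stored in bfloat16 (each rounds to 0.010009765625)
values = torch.full((1000,), 0.01, dtype=torch.bfloat16)

# Accumulate sequentially with a bfloat16 accumulator
acc_bf16 = torch.tensor(0.0, dtype=torch.bfloat16)
for v in values:
    acc_bf16 = acc_bf16 + v  # result is rounded to bfloat16 after every addition

# Accumulate the same bfloat16 inputs with a float32 accumulator
acc_fp32 = torch.tensor(0.0, dtype=torch.float32)
for v in values:
    acc_fp32 = acc_fp32 + v.float()

# The float32 total is close to 1000 * 0.010009765625; the bfloat16 one stalls well short
print(acc_bf16.item(), acc_fp32.item())
```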

Example 5: Multiple Models in a Vollo Program

Vollo supports putting multiple models on a single accelerator.

Multiple NNIRs can be compiled into a single program:

import torch
import torch.nn as nn
import torch.nn.functional as F
import vollo_torch
import vollo_torch.nn

class MLP(nn.Module):
    def __init__(self, input_size, output_size, hidden_size):
        super().__init__()
        self.fc1 = nn.Linear(input_size, hidden_size)
        self.fc2 = nn.Linear(hidden_size, hidden_size)
        self.out = nn.Linear(hidden_size, output_size)

    def forward(self, x):
        x = F.relu(self.fc1(x))
        residual = x
        x = F.relu(self.fc2(x)) + residual
        return self.out(x)

# Instantiate an MLP
input_size = 784
output_size = 10
hidden_size = 128
mlp_model = MLP(input_size, output_size, hidden_size)
mlp_input = torch.randn(input_size)
(mlp_model, mlp_expected_output) = vollo_torch.fx.prepare_shape(mlp_model, mlp_input)
mlp_nnir = vollo_torch.fx.nnir.to_nnir(mlp_model)

class CNN(nn.Module):
    def __init__(self, in_channels, out_channels, hidden_channels, kernel_size=3):
        super().__init__()
        self.conv1 = vollo_torch.nn.PaddedConv1d(in_channels, hidden_channels, kernel_size)
        self.conv2 = vollo_torch.nn.PaddedConv1d(hidden_channels, out_channels, kernel_size)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = F.relu(self.conv2(x))
        return x

# Instantiate a CNN
in_channels = 32
out_channels = 1
hidden_channels = 128
cnn_model = CNN(in_channels, out_channels, hidden_channels)
batch_size = 1
sequence_length = 5
cnn_input = torch.randn(batch_size, in_channels, sequence_length)
(cnn_model, cnn_expected_output) = vollo_torch.fx.prepare_shape(cnn_model, cnn_input)
cnn_nnir = vollo_torch.fx.nnir.to_nnir(cnn_model)
(cnn_nnir, output_axis) = cnn_nnir.streaming_transform(2)

# Compile the multi-model program

import vollo_compiler

# Replace the Config in the line below with the Config for the accelerator you

# are using

program_builder = vollo_compiler.ProgramBuilder(vollo_compiler.Config.ia_420f_c6b32())

program_builder.add_nnir(mlp_nnir)

program_builder.add_nnir(cnn_nnir)

multi_model_program = program_builder.to_program()

The vollo_compiler.ProgramBuilder allows you to create a multi-model program. Building a multi-model program may give an allocation error if the models can't fit on the given Config. Generally, each model has only a small latency overhead compared to running it as an individual program; this overhead comes from selecting which model to run.

A model_index can be provided when running inferences on the accelerator or on the VM. The models appear in the order in which they were added to the ProgramBuilder. For example on the VM:

vm = multi_model_program.to_vm()

mlp_vm_output = vm.run(mlp_input.detach().numpy(), model_index = 0)

torch.testing.assert_close(mlp_expected_output, torch.from_numpy(mlp_vm_output), atol = 1e-2, rtol = 1e-2)

cnn_vm_outputs = []
for i in range(5):
    cnn_vm_outputs.append(vm.run(cnn_input[:, :, i].detach().numpy(), model_index = 1))

torch.testing.assert_close(
    cnn_expected_output,
    torch.stack(
        [torch.from_numpy(output) for output in cnn_vm_outputs],
        axis=output_axis,
    ),
    atol = 1e-2,
    rtol = 1e-2
)
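The per-element loops in these examples all follow the same pattern. The hypothetical helper below (not part of the Vollo SDK) factors it out using NumPy. It assumes only the vm.run(array, model_index=...) call shown in this example, and passes model_index only when one is given.

```python
from typing import Optional

import numpy as np


def run_streaming(vm, sequence: np.ndarray, seq_axis: int, out_axis: int,
                  model_index: Optional[int] = None) -> np.ndarray:
    """Feed a streaming program one sequence element per inference and stack the outputs.

    vm: any object with a run(array, model_index=...) method, such as the VM
        returned by program.to_vm().
    seq_axis: the input axis that was passed to streaming_transform.
    out_axis: the output axis returned by streaming_transform.
    """
    outputs = []
    for i in range(sequence.shape[seq_axis]):
        # Select the i-th element along the sequence axis (removes that axis)
        element = np.take(sequence, i, axis=seq_axis)
        if model_index is None:
            outputs.append(vm.run(element))
        else:
            outputs.append(vm.run(element, model_index=model_index))
    # Re-insert the sequence axis at the position reported by streaming_transform
    return np.stack(outputs, axis=out_axis)
```

With the objects above, the CNN loop would become run_streaming(vm, cnn_input.detach().numpy(), seq_axis=2, out_axis=output_axis, model_index=1).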

ONNX Support

Vollo also provides a tool for compiling ML models defined in ONNX.

vollo-onnx is a command line tool which allows the user to specify an input ONNX file and produces a .vollo program as output. The user specifies a path to the input .onnx file:

Arguments:
  <INPUT>  Path to the input .onnx file

The user can specify:

- The output path:
  -o, --output <OUTPUT>  Output path for the compiled program file [default: program.vollo]

- A name for the model:
  --model-name <MODEL_NAME>  Name of the model

- The hardware configuration to use, based on a JSON file (this JSON file can be generated using the Config method save in the vollo_compiler Python module):
  --hw-config <HW_CONFIG_JSON>  Path to the hardware config JSON file

- A name for the hardware configuration to use (from a set of preset configs):
  --hw-config-preset <PRESET_NAME>  Hardware configuration to use, chosen from a set of presets [possible values: ia420f-c6b32, ia840f-c3b64, ia840f-c2b64d, v80-c6b32]

- Which transformations to perform on the model. Currently the only available transformation is the streaming transform (see Example 2: CNN):
  --streaming-transform <STREAMING_AXIS>...  Axes on which to perform the streaming transform in the NNIR graph. There should be one axis per model input, as separate arguments. If unspecified, no streaming transform is performed. Example: --streaming-transform 0 1 for a two-input model with input streaming axes 0 and 1

- The input shapes of the model. This is required if the ONNX model has dynamic input shapes, since Vollo requires the shapes of the inputs to be known at compile time:
  --override-input-shapes <SHAPE>...  If the model has dynamic input shapes, the user must pass fixed input shapes. Separate each dimension with a ',' and pass each model input as a separate argument. Example: --override-input-shapes 10,100,250 20,40 for a two-input model with input shapes (10, 100, 250) and (20, 40)

- Whether to elide all compute logic and generate a program with only IO logic. This is useful for determining IO latencies:
  --io-only  Generate a program with IO only - useful for testing IO latencies

- Whether to disable certain optimizations in the compiler which increase compilation time:
  --disable-optimizations  Disables some compiler optimizations. This can improve compilation time
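If you drive vollo-onnx from a Python script, a small hypothetical helper like the one below can assemble the arguments. It uses only the flags listed above and leaves running the command (for example with subprocess.run) to you. As a precaution, the input file is placed first so that it cannot be read as an extra value of a multi-value option such as --override-input-shapes.

```python
import shlex
from typing import List, Optional, Sequence


def vollo_onnx_command(
    onnx_path: str,
    output: str = "program.vollo",
    hw_config_preset: Optional[str] = None,
    streaming_axes: Optional[Sequence[int]] = None,
    input_shapes: Optional[Sequence[Sequence[int]]] = None,
) -> List[str]:
    """Build a vollo-onnx argument list (hypothetical helper, not part of the SDK)."""
    cmd = ["vollo-onnx", onnx_path, "--output", output]
    if hw_config_preset is not None:
        cmd += ["--hw-config-preset", hw_config_preset]
    if streaming_axes:
        # One axis per model input, each as a separate argument
        cmd += ["--streaming-transform", *map(str, streaming_axes)]
    if input_shapes:
        # One argument per model input, dimensions separated by ','
        cmd += ["--override-input-shapes", *(",".join(map(str, s)) for s in input_shapes)]
    return cmd


cmd = vollo_onnx_command(
    "model-sim.onnx",
    hw_config_preset="ia420f-c6b32",
    input_shapes=[(10, 100, 250), (20, 40)],
)
print(shlex.join(cmd))
```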

Simplifying ONNX Models

vollo-onnx has a limited list of supported ONNX nodes. Often ONNX models can be over-complicated, and contain unnecessary shaping operations. It is recommended that onnx-simplifier be used before calling vollo-onnx on an ONNX model to remove these unnecessary shaping operations which aren't supported by vollo-onnx:

onnx-sim <model.onnx> <model-sim.onnx> --overwrite-input-shape <model-input-shape>

It is also recommended to use the --overwrite-input-shape option with onnx-simplifier, as this can enable further simplifications and better constant folding.

Using ONNX from Python

ONNX models can also be imported and translated to NNIR models directly in Python using the static NNIR method from_onnx. This also requires the input shapes to be specified if the ONNX model has dynamic input shapes; otherwise input_shapes can be None.

onnx_nnir = vollo_compiler.NNIR.from_onnx(onnx_path, input_shapes)

Supported Nodes

Tensors are expected to be in float32 format, unless they are used as indices / axes (in which case they should be int64s).

vollo-onnx supports models with the following nodes:

| Operator | Support Notes |
|---|---|
| Pointwise arithmetic ops | Add, Sub, Mul, Div |
| Inequality | >, <, >=, <= (when followed by a Where) |
| Max and Min | |
| Neg | |
| Clamp ops | Clip, Relu |
| Matrix multiplication | MatMul / Gemm where one input is a constant |
| Conv | 1d with left-padding such that input and output seq dimensions match, groups == 1 or groups == in_channels == out_channels |
| LSTM | LSTM without explicit hidden or cell state initialisation |
| Gather | With a 0d/1d tensor of indices |
| Slice | Step size 1 with constant starts, ends and axes |
| ReduceSum, ReduceMean | With constant axes; keepdims = 1 required on data dimension |
| Where | If the Where condition is an inequality comparison |
| Concat | |
| Transpose | See Tensor Memory Format |
| Softmax | |
| LayerNormalization | With axis = -1. Supported in ONNX opset versions >= 17 |
| BatchNormalization | Where input scale, bias, mean and var are constants |
| Squeeze, Unsqueeze | |
| Reciprocal | |
| Identity | |
| Sqrt | |
| Tanh | |
| Exp | |
| Softplus | |
| Sigmoid | |
| Expand | |
| Reshape | The stride of the data dimension must be unchanged |

Benchmarks

This section provides benchmarks for the Vollo accelerator for a variety of models.

Performance figures are given for the following configurations of the Vollo accelerator:

- a 6 core, block size 32 configuration which is provided for the V80 accelerator card

- a 3 core, block size 64 configuration which is provided for the IA-840F accelerator card

- a 6 core, block size 32 configuration which is provided for the IA-420F accelerator card

If you require a different configuration, please contact us at vollo@myrtle.ai.

All these performance numbers can be measured using the vollo-sdk with the correct accelerator card by running the provided benchmark script.

We also provide performance figures for a PCIe roundtrip for various input and output sizes.

Multilayer perceptron (MLP)

The model below is a simple multilayer perceptron (MLP) with 3 layers.

import torch.nn as nn

class MLP(nn.Module):
    def __init__(self, input_size, hidden_size, output_size):
        super().__init__()
        self.fc1 = nn.Linear(input_size, hidden_size)
        self.fc2 = nn.Linear(hidden_size, hidden_size)
        self.fc3 = nn.Linear(hidden_size, output_size)

    def forward(self, x):
        x = nn.functional.relu(self.fc1(x))
        x = nn.functional.relu(self.fc2(x))
        return self.fc3(x)

# Layer sizes must be integers for nn.Linear
mlp = MLP(256, 384, 128)

We demonstrate the model at a variety of batch sizes. The model has 295K parameters.
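As a quick sanity check, the 295K figure can be reproduced by counting the weights and biases of the three nn.Linear layers: a Linear(in, out) layer holds in × out weights plus out biases. This sketch is plain Python and does not need PyTorch; the helper names are hypothetical.

```python
def linear_params(in_features, out_features):
    """Weights plus biases of a dense layer such as torch.nn.Linear(in_features, out_features)."""
    return in_features * out_features + out_features


def mlp_params(input_size, hidden_size, output_size):
    """Parameter count of the 3-layer MLP above (fc1, fc2 and fc3)."""
    return (
        linear_params(input_size, hidden_size)
        + linear_params(hidden_size, hidden_size)
        + linear_params(hidden_size, output_size)
    )


print(mlp_params(256, 384, 128))  # 295808, i.e. about 295K
```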

V80: 6 cores, block size 32

| Model | Batch size | Mean latency (us) | 99th percentile latency (us) |

|---|---|---|---|

| mlp_b1 | 1 | 2.3 | 2.5 |

| mlp_b4 | 4 | 2.8 | 3.1 |

| mlp_b8 | 8 | 3.4 | 4.0 |

IA-840F: 3 cores, block size 64

| Model | Batch size | Mean latency (us) | 99th percentile latency (us) |

|---|---|---|---|

| mlp_b1 | 1 | 2.5 | 2.6 |

| mlp_b4 | 4 | 2.7 | 3.0 |

| mlp_b8 | 8 | 3.2 | 3.5 |

IA-420F: 6 cores, block size 32

| Model | Batch size | Mean latency (us) | 99th percentile latency (us) |

|---|---|---|---|

| mlp_b1 | 1 | 2.5 | 2.7 |

| mlp_b4 | 4 | 3.1 | 3.3 |

| mlp_b8 | 8 | 4.1 | 4.4 |

1D Convolutional neural networks (CNN)

We benchmark a simple 1-D convolutional model with a residual connection after every layer.

import torch.nn as nn
import vollo_torch

class ConvBlock(nn.Module):
    def __init__(self, channels, kernel_size):
        super().__init__()
        self.conv = vollo_torch.nn.PaddedConv1d(channels, channels, kernel_size)

    def forward(self, inp):
        x = self.conv(inp)
        return nn.functional.relu(x) + inp  # residual connection

class CNN(nn.Module):
    def __init__(self, num_layers, kernel_size, channels):
        super().__init__()
        assert num_layers >= 1
        self.cnn = nn.Sequential(
            *[ConvBlock(channels, kernel_size) for _ in range(num_layers)],
        )

    def forward(self, x):
        x = self.cnn(x)  # N x channels x T
        return x

The kernel size for all models is 8. The batch size and sequence length are both set to 1 (i.e., we benchmark a single timestep). Consecutive inferences are run with spacing between them to minimise latency.
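The Parameters column in the tables below can be reproduced by hand. A minimal sketch, assuming vollo_torch.nn.PaddedConv1d holds the same weight (out_channels × in_channels × kernel_size) and bias as a torch.nn.Conv1d with groups=1, and that its padding adds no parameters; the helper names are hypothetical.

```python
def conv1d_params(in_channels, out_channels, kernel_size):
    """Weight of shape (out, in, kernel) plus one bias per output channel."""
    return out_channels * in_channels * kernel_size + out_channels


def cnn_params(num_layers, kernel_size, channels):
    """Each ConvBlock has one channels -> channels convolution; ReLU and the residual add are parameter-free."""
    return num_layers * conv1d_params(channels, channels, kernel_size)


for name, layers, channels in [("cnn_tiny", 3, 128), ("cnn_small", 3, 256), ("cnn_med", 6, 256)]:
    print(name, cnn_params(layers, 8, channels))
# cnn_tiny 393600, cnn_small 1573632, cnn_med 3147264
```

These round to the 393K, 1.6M and 3.1M listed for each card.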

V80: 6 cores, block size 32

| Model | Layers | Channels | Parameters | Mean latency (us) | 99th percentile latency (us) |

|---|---|---|---|---|---|

| cnn_tiny | 3 | 128 | 393K | 2.5 | 2.8 |

| cnn_small | 3 | 256 | 1.6M | 2.4 | 2.7 |

| cnn_med | 6 | 256 | 3.1M | 3.3 | 3.4 |

IA-840F: 3 cores, block size 64

| Model | Layers | Channels | Parameters | Mean latency (us) | 99th percentile latency (us) |

|---|---|---|---|---|---|

| cnn_tiny | 3 | 128 | 393K | 2.1 | 2.3 |

| cnn_small | 3 | 256 | 1.6M | 2.5 | 2.7 |

| cnn_med | 6 | 256 | 3.1M | 3.1 | 3.3 |

IA-420F: 6 cores, block size 32

| Model | Layers | Channels | Parameters | Mean latency (us) | 99th percentile latency (us) |

|---|---|---|---|---|---|

| cnn_tiny | 3 | 128 | 393K | 2.2 | 2.3 |

| cnn_small | 3 | 256 | 1.6M | 2.7 | 2.9 |

| cnn_med | 6 | 256 | 3.1M | 3.4 | 3.6 |

Long Short Term Memory (LSTM) networks

We benchmark an LSTM model consisting of a stack of LSTMs followed by a linear layer.

import torch.nn as nn
import vollo_torch

class LSTM(nn.Module):
    def __init__(self, num_layers, input_size, hidden_size, output_size):
        super().__init__()
        assert num_layers >= 1
        self.lstm = vollo_torch.nn.LSTM(
            input_size, hidden_size, num_layers=num_layers
        )
        self.fc = nn.Linear(hidden_size, output_size)

    def forward(self, x):
        x = self.lstm(x)
        x = self.fc(x)
        return x

For all the benchmarked models, the input size is the same as the hidden size and the output size is set to 32. Layers refers to the number of LSTM layers in the stack. The batch size and sequence length are both set to 1 (i.e., we benchmark a single timestep). Consecutive inferences are run with spacing between them to minimise latency.
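The Parameters column below is consistent with the standard torch.nn.LSTM parameterisation, in which each layer has four gates, each with input weights, recurrent weights and two bias vectors. A sketch under that assumption (not confirmed here for vollo_torch.nn.LSTM); the helper names are hypothetical.

```python
def lstm_layer_params(input_size, hidden_size):
    """One torch.nn.LSTM-style layer: 4 gates x (input weights + recurrent weights + 2 biases)."""
    gates = 4 * hidden_size
    return gates * input_size + gates * hidden_size + 2 * gates


def lstm_model_params(num_layers, hidden_size, output_size=32):
    """Stacked LSTM (input size == hidden size) followed by an nn.Linear to output_size."""
    stack = num_layers * lstm_layer_params(hidden_size, hidden_size)
    fc = hidden_size * output_size + output_size
    return stack + fc


MODELS = {
    "lstm_tiny": (2, 128),
    "lstm_small": (3, 256),
    "lstm_med": (3, 480),
    "lstm_med_deep": (6, 320),
    "lstm_large": (3, 960),
}

for name, (layers, hidden) in MODELS.items():
    print(name, lstm_model_params(layers, hidden))
# lstm_tiny 268320, lstm_small 1587232, lstm_med 5556512,
# lstm_med_deep 4940832, lstm_large 22172192
```

These round to the 268K, 1.6M, 5.6M, 4.9M and 22.2M in the tables. With a single bias vector per gate the tiny model would come to 267,296, so the tables appear to count both biases.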

We have also had LSTM models benchmarked and audited as part of a STAC-ML submission where we held the lowest latency across all models. Please refer to our STAC-ML submissions for more details:

Note that Vollo's current performance, as shown in the tables below, is significantly improved over the STAC-ML submissions.

V80: 6 cores, block size 32

| Model | Layers | Hidden size | Parameters | Mean latency (us) | 99th percentile latency (us) |

|---|---|---|---|---|---|

| lstm_tiny | 2 | 128 | 268K | 2.4 | 2.7 |

| lstm_small | 3 | 256 | 1.6M | 2.8 | 3.0 |

| lstm_med | 3 | 480 | 5.6M | 3.9 | 4.2 |

| lstm_med_deep | 6 | 320 | 4.9M | 4.2 | 4.5 |

| lstm_large | 3 | 960 | 22.2M | 8.1 | 8.4 |

IA-840F: 3 cores, block size 64

| Model | Layers | Hidden size | Parameters | Mean latency (us) | 99th percentile latency (us) |

|---|---|---|---|---|---|

| lstm_tiny | 2 | 128 | 268K | 2.2 | 2.3 |

| lstm_small | 3 | 256 | 1.6M | 2.9 | 3.0 |

| lstm_med | 3 | 480 | 5.6M | 3.5 | 3.6 |

| lstm_med_deep | 6 | 320 | 4.9M | 3.8 | 4.0 |

The large model is not supported on the IA-840F accelerator card as it is too large to fit in the accelerator memory.

IA-420F: 6 cores, block size 32

| Model | Layers | Hidden size | Parameters | Mean latency (us) | 99th percentile latency (us) |

|---|---|---|---|---|---|

| lstm_tiny | 2 | 128 | 268K | 2.2 | 2.3 |

| lstm_small | 3 | 256 | 1.6M | 3.1 | 3.2 |

The lstm_med, lstm_med_deep and lstm_large models are not supported on the IA-420F accelerator card as they are too large to fit in the accelerator memory.

IO Round Trip

The following IO round trip times were measured from the Vollo runtime using a Vollo accelerator program that performs no compute.

More specifically, this Vollo accelerator program waits for the last input byte to arrive before it sends the first output byte back. This method accounts for some of the overheads associated with IO (such as copying to the DMA buffer in the Vollo runtime), and the test is set up to show how they scale with different sizes of input and output values.

The following tables show the round trip times in μs on the IA-420F board (similar times were observed on the IA-840F). Rows give the number of output values and columns the number of input values (out\in); each value is a bfloat16 (2 bytes). Using fewer than 32 values gives the same times as using 32 values.

To reproduce these values on your own hardware, run the provided benchmark script with the environment variable RUN_IO_TEST=1.
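To read the tables for a particular model, its input and output value counts can be rounded up to the nearest tabulated size. A minimal sketch of that lookup; the helper names are hypothetical, and rounding up is only a simple reading convention (the measured times are not strictly monotonic in size).

```python
TABLE_SIZES = [32, 64, 128, 256, 512, 1024, 2048, 4096, 8192]
BYTES_PER_VALUE = 2  # each value is a bfloat16


def table_size(num_values):
    """Smallest tabulated size covering num_values; fewer than 32 values behaves like 32."""
    for size in TABLE_SIZES:
        if num_values <= size:
            return size
    raise ValueError(f"{num_values} values exceeds the largest measured size ({TABLE_SIZES[-1]})")


def payload_bytes(num_values):
    """Bytes transferred for num_values bfloat16 values."""
    return num_values * BYTES_PER_VALUE


# Hypothetical model with 100 input values and 10 output values:
print("row (out):", table_size(10), "column (in):", table_size(100))  # row (out): 32 column (in): 128
print("input bytes:", payload_bytes(100))  # input bytes: 200
```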

User buffers

This includes copying data to/from DMA buffers.

mean:

| out\in | 32 | 64 | 128 | 256 | 512 | 1024 | 2048 | 4096 | 8192 |

|---|---|---|---|---|---|---|---|---|---|

| 32 | 1.8 | 1.8 | 1.9 | 1.9 | 1.9 | 2.1 | 2.7 | 3.4 | 4.6 |

| 64 | 1.8 | 1.9 | 1.9 | 1.9 | 2.0 | 2.2 | 2.8 | 3.3 | 4.7 |

| 128 | 1.9 | 1.9 | 1.9 | 2.0 | 2.0 | 2.2 | 2.7 | 3.3 | 4.7 |

| 256 | 1.9 | 1.9 | 1.9 | 2.0 | 2.0 | 2.2 | 2.7 | 3.4 | 5.0 |

| 512 | 1.9 | 1.9 | 2.0 | 2.0 | 2.1 | 2.2 | 2.8 | 3.4 | 4.8 |

| 1024 | 2.2 | 2.2 | 2.2 | 2.1 | 2.3 | 2.3 | 2.7 | 3.6 | 4.9 |

| 2048 | 2.4 | 2.4 | 2.5 | 2.5 | 2.5 | 2.6 | 2.8 | 3.8 | 5.0 |

| 4096 | 2.9 | 2.9 | 2.9 | 3.0 | 3.0 | 3.3 | 3.6 | 3.6 | 5.2 |

| 8192 | 3.8 | 3.8 | 3.9 | 3.9 | 3.9 | 4.1 | 4.7 | 4.5 | 5.1 |

p99:

| out\in | 32 | 64 | 128 | 256 | 512 | 1024 | 2048 | 4096 | 8192 |

|---|---|---|---|---|---|---|---|---|---|

| 32 | 2.0 | 2.0 | 2.1 | 2.0 | 2.1 | 2.4 | 3.0 | 3.7 | 4.9 |

| 64 | 2.0 | 2.0 | 2.1 | 2.1 | 2.1 | 2.4 | 3.0 | 3.6 | 5.0 |

| 128 | 2.0 | 2.0 | 2.1 | 2.1 | 2.2 | 2.4 | 2.9 | 3.6 | 5.0 |

| 256 | 2.0 | 2.0 | 2.0 | 2.1 | 2.2 | 2.5 | 3.1 | 3.6 | 5.3 |

| 512 | 2.0 | 2.1 | 2.2 | 2.1 | 2.3 | 2.4 | 3.0 | 3.7 | 5.1 |

| 1024 | 2.4 | 2.4 | 2.4 | 2.4 | 2.4 | 2.5 | 3.1 | 3.8 | 5.4 |

| 2048 | 2.6 | 2.6 | 2.7 | 2.7 | 2.6 | 2.8 | 3.1 | 4.2 | 5.4 |

| 4096 | 3.2 | 3.2 | 3.2 | 3.3 | 3.3 | 3.6 | 3.9 | 3.8 | 5.5 |

| 8192 | 4.1 | 4.1 | 4.1 | 4.1 | 4.2 | 4.4 | 5.0 | 4.7 | 5.3 |

Raw DMA buffers

These measurements use buffers allocated with vollo_rt_get_raw_buffer, which lets the runtime skip the IO copy.

mean:

| out\in | 32 | 64 | 128 | 256 | 512 | 1024 | 2048 | 4096 | 8192 |

|---|---|---|---|---|---|---|---|---|---|

| 32 | 1.7 | 1.8 | 1.8 | 1.8 | 1.9 | 1.9 | 2.0 | 2.2 | 2.6 |

| 64 | 1.7 | 1.8 | 1.8 | 1.9 | 1.9 | 1.9 | 2.0 | 2.2 | 2.7 |

| 128 | 1.8 | 1.8 | 1.8 | 1.8 | 1.9 | 1.9 | 2.0 | 2.2 | 2.6 |

| 256 | 1.8 | 1.8 | 1.8 | 1.9 | 1.8 | 1.9 | 2.1 | 2.2 | 2.6 |

| 512 | 1.8 | 1.8 | 1.8 | 1.8 | 1.9 | 1.9 | 2.1 | 2.3 | 2.6 |

| 1024 | 1.9 | 1.9 | 1.9 | 1.9 | 1.9 | 2.0 | 2.1 | 2.3 | 2.7 |

| 2048 | 1.9 | 1.9 | 2.0 | 2.0 | 2.0 | 2.1 | 2.2 | 2.4 | 2.8 |

| 4096 | 2.2 | 2.2 | 2.2 | 2.2 | 2.2 | 2.3 | 2.4 | 2.6 | 3.0 |

| 8192 | 2.5 | 2.5 | 2.6 | 2.6 | 2.6 | 2.7 | 2.8 | 3.0 | 3.4 |

p99:

| out\in | 32 | 64 | 128 | 256 | 512 | 1024 | 2048 | 4096 | 8192 |

|---|---|---|---|---|---|---|---|---|---|

| 32 | 1.9 | 1.9 | 1.9 | 1.9 | 2.1 | 2.1 | 2.2 | 2.4 | 2.9 |

| 64 | 1.9 | 1.9 | 2.0 | 1.9 | 2.0 | 2.1 | 2.2 | 2.4 | 2.9 |

| 128 | 1.9 | 1.9 | 1.9 | 1.9 | 2.1 | 2.0 | 2.2 | 2.4 | 2.9 |

| 256 | 1.9 | 2.0 | 2.0 | 1.9 | 2.0 | 2.1 | 2.2 | 2.4 | 2.8 |

| 512 | 1.9 | 2.0 | 2.0 | 2.0 | 2.0 | 2.1 | 2.2 | 2.5 | 2.9 |

| 1024 | 2.1 | 2.0 | 2.0 | 2.1 | 2.1 | 2.1 | 2.3 | 2.6 | 2.9 |

| 2048 | 2.1 | 2.1 | 2.1 | 2.2 | 2.2 | 2.2 | 2.3 | 2.6 | 3.0 |

| 4096 | 2.3 | 2.3 | 2.3 | 2.4 | 2.4 | 2.5 | 2.6 | 2.9 | 3.4 |

| 8192 | 2.8 | 2.7 | 2.7 | 2.7 | 2.8 | 2.8 | 3.0 | 3.2 | 3.6 |
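The cost of the runtime's IO copy can be roughly estimated by subtracting the raw DMA means from the user-buffer means. A sketch using the 32-output rows of the two mean tables above (differences also include measurement noise):

```python
SIZES = [32, 64, 128, 256, 512, 1024, 2048, 4096, 8192]

# Mean round trip times (us) for 32 output values, copied from the mean tables above.
USER_BUFFERS_MEAN = [1.8, 1.8, 1.9, 1.9, 1.9, 2.1, 2.7, 3.4, 4.6]
RAW_DMA_MEAN = [1.7, 1.8, 1.8, 1.8, 1.9, 1.9, 2.0, 2.2, 2.6]


def copy_overhead(user, raw):
    """Per-size difference between user-buffer and raw DMA round trips, in us."""
    return [round(u - r, 1) for u, r in zip(user, raw)]


for size, extra in zip(SIZES, copy_overhead(USER_BUFFERS_MEAN, RAW_DMA_MEAN)):
    print(f"{size:>5} input values: ~{extra} us spent on the IO copy")
```

The overhead stays at or below 0.2 μs up to 1024 input values and grows to about 2.0 μs at 8192.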

MMIO

These measurements use MMIO instead of DMA for the input (raw DMA buffers are used for the output).

MMIO is configured via the VOLLO_MMIO_MAX_SIZE environment variable.

Typically MMIO would only be used for smaller inputs, but these tables show it used for the entire input at every size.

mean:

| out\in | 32 | 64 | 128 | 256 | 512 | 1024 | 2048 | 4096 | 8192 |

|---|---|---|---|---|---|---|---|---|---|

| 32 | 1.0 | 1.3 | 1.5 | 2.0 | 3.1 | 5.4 | 9.6 | 18.5 | 35.9 |

| 64 | 1.1 | 1.2 | 1.5 | 2.0 | 3.1 | 5.3 | 9.7 | 18.4 | 34.8 |

| 128 | 1.1 | 1.2 | 1.5 | 2.0 | 3.2 | 5.2 | 9.5 | 18.4 | 35.8 |

| 256 | 1.1 | 1.2 | 1.5 | 2.0 | 3.1 | 5.2 | 9.7 | 18.4 | 35.9 |

| 512 | 1.1 | 1.2 | 1.5 | 2.1 | 3.1 | 5.3 | 9.7 | 18.4 | 35.8 |

| 1024 | 1.2 | 1.3 | 1.6 | 2.1 | 3.2 | 5.3 | 9.8 | 18.5 | 36.0 |

| 2048 | 1.2 | 1.4 | 1.7 | 2.2 | 3.2 | 5.5 | 9.9 | 18.5 | 35.9 |

| 4096 | 1.5 | 1.6 | 1.9 | 2.4 | 3.6 | 5.7 | 10.1 | 18.8 | 36.1 |

| 8192 | 1.9 | 2.0 | 2.2 | 2.8 | 3.9 | 6.1 | 10.4 | 19.2 | 36.7 |

p99:

| out\in | 32 | 64 | 128 | 256 | 512 | 1024 | 2048 | 4096 | 8192 |

|---|---|---|---|---|---|---|---|---|---|

| 32 | 1.2 | 1.5 | 1.6 | 2.1 | 3.2 | 5.5 | 9.9 | 19.1 | 37.0 |

| 64 | 1.2 | 1.4 | 1.6 | 2.2 | 3.2 | 5.5 | 9.8 | 19.0 | 35.9 |

| 128 | 1.2 | 1.3 | 1.6 | 2.1 | 3.4 | 5.5 | 10.0 | 19.1 | 36.9 |

| 256 | 1.2 | 1.4 | 1.6 | 2.2 | 3.2 | 5.3 | 9.8 | 19.1 | 36.8 |

| 512 | 1.3 | 1.4 | 1.6 | 2.2 | 3.3 | 5.5 | 9.9 | 19.1 | 36.8 |

| 1024 | 1.5 | 1.4 | 1.7 | 2.2 | 3.3 | 5.5 | 9.8 | 19.2 | 37.0 |

| 2048 | 1.3 | 1.4 | 1.8 | 2.4 | 3.4 | 5.5 | 10.1 | 19.2 | 36.9 |

| 4096 | 1.6 | 1.8 | 2.0 | 2.5 | 3.6 | 5.9 | 10.2 | 19.4 | 37.1 |

| 8192 | 2.0 | 2.1 | 2.3 | 2.9 | 4.0 | 6.3 | 10.6 | 19.8 | 37.6 |
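Comparing the 32-output rows of the raw DMA and MMIO mean tables shows why MMIO is normally reserved for small inputs. Both measurements use raw DMA buffers for the output, so the difference comes from the input path. A small sketch of the comparison (values copied from the tables above); it does not suggest a value for VOLLO_MMIO_MAX_SIZE, whose units are not stated in this section.

```python
SIZES = [32, 64, 128, 256, 512, 1024, 2048, 4096, 8192]

# Mean round trip times (us) for 32 output values, copied from the tables above.
RAW_DMA_MEAN = [1.7, 1.8, 1.8, 1.8, 1.9, 1.9, 2.0, 2.2, 2.6]
MMIO_MEAN = [1.0, 1.3, 1.5, 2.0, 3.1, 5.4, 9.6, 18.5, 35.9]


def mmio_wins(sizes, mmio, dma):
    """Input sizes (in values) at which MMIO input beats raw DMA input."""
    return [n for n, m, d in zip(sizes, mmio, dma) if m < d]


faster = mmio_wins(SIZES, MMIO_MEAN, RAW_DMA_MEAN)
print(faster)  # [32, 64, 128]
print(max(faster) * 2, "bytes")  # 256 bytes of bfloat16 input
```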

Setting up the Vollo accelerator

This section describes how to program your accelerator card with the Vollo Accelerator upon first use and how to reprogram your accelerator card with updated versions of the Vollo Accelerator. It also describes how to obtain a Vollo license which you will need to use the Vollo accelerator.

Environment Variable Setup

The initial setup instructions should be run in the Vollo SDK directory.

cd vollo-sdk-<VERSION>

When using Vollo, you should also have the setup.sh script sourced in bash to set up the environment variables used by Vollo:

source setup.sh

System Requirements

CPU Requirements

The minimum CPU specification for the system is shown below.

- Single Socket 6 core Intel Xeon CPU at 2.0 GHz, equivalent AMD processor or better.

- 8 GB RAM

Accelerator Card Requirements

The SDK runs on a server CPU with PCIe FPGA accelerator cards. It currently supports the following accelerator cards:

| Accelerator Card | FPGA | Max parameter count |

|---|---|---|

| BittWare IA-420f | Intel Agilex AGF014 | 3.1 Million |

| BittWare IA-840f | Intel Agilex AGF027 | 8.4 Million |

| Napatech NT400D11 | Intel Agilex AGF014 | 3.1 Million |

| AMD Alveo V80 | AMD Versal XCV80 | 25.2 Million |

| Silicom fb4CGg3 | AMD Ultrascale+ VU9P | 12.6 Million |

Operating System Requirements

Vollo is compatible with Ubuntu 20.04 and later.

Programming the Agilex FPGA

Download the bitstream for your FPGA

The bitstream is available on the GitHub Release page alongside the Vollo SDK.

For example, to download the bitstream for the Agilex IA-840F board with the c2b64d configuration of Vollo:

curl -LO https://github.com/MyrtleSoftware/vollo-sdk/releases/download/v26.0.2/vollo-ia840f-c2b64d-26.0.tar.gz

mkdir -p $VOLLO_SDK/bitstream

tar -xzf vollo-ia840f-c2b64d-26.0.tar.gz -C $VOLLO_SDK/bitstream

The Agilex-based boards that are currently supported are:

- BittWare IA-420F
- BittWare IA-840F
- Napatech NT400D11 (Link programmable version)

Note on programming the Napatech NT400D11 over JTAG

The NT400D11 card needs additional cabling beyond a USB cable to program the board over JTAG. This cabling is provided as a development kit by Napatech (PGM-DEVKIT-IFPGA-DF52-2XIDC-UART, product number: 802-0116-01-10) and includes:

- An Intel® FPGA USB-Blaster II cable, and
- A Napatech Passive FPGA Download Cable converter box

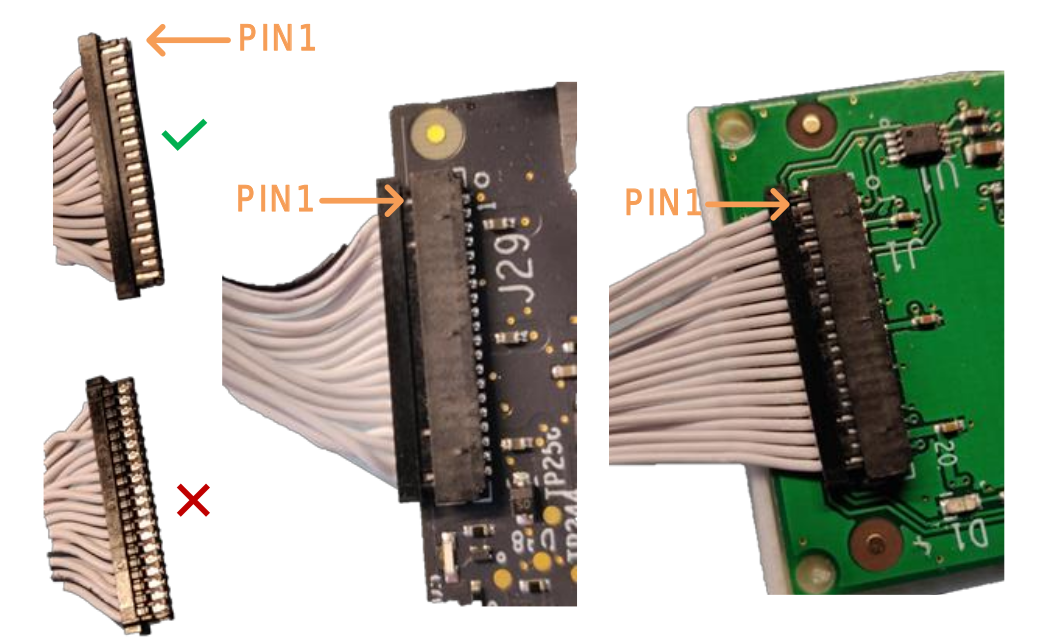

These two cables must be connected to each other as shown in the image below:

To connect this cable array to the Napatech board, the long thin plug should be connected to the connector shown in the following image:

In order to correctly couple these two connectors, observe the conventions shown in the following image:

Connect the USB side of the cable array to a high-speed USB port on your host PC and then follow the rest of the directions in the next section.

Programming the FPGA via JTAG

If your FPGA is not already programmed with the Vollo accelerator then please follow these instructions to load the bitstream into the accelerator card's flash memory.

This requires a USB cable to be connected to the accelerator card and Quartus Programmer to be installed on the system so that the device can be programmed over JTAG.

If the FPGA card already has a Vollo Accelerator bitstream, it can be updated over PCIe by following the steps in the section Programming the FPGA via PCIe below. Note that you only need to update the bitstream if updating to an incompatible version of the Vollo SDK. Programming over PCIe is faster than programming over JTAG, and does not require a USB programming cable or Quartus Programmer to be installed.

1. Download and install the latest Quartus Programmer:

   - Navigate to https://www.intel.com/content/www/us/en/software-kit/782411/intel-quartus-prime-pro-edition-design-software-version-23-2-for-linux.html.
   - Select Additional Software and scroll down to find the Programmer.
   - Follow the instructions for installation.

2. Add Quartus Programmer to your path:

export QUARTUS_DIR=<path to qprogrammer install>
export PATH=$QUARTUS_DIR/qprogrammer/quartus/bin:$PATH

3. Start the JTAG daemon:

sudo killall jtagd
sudo jtagd

4. Run jtagconfig from the Quartus install; you should see the device(s):

$ jtagconfig
1) IA-840F [1-5.2]
  0341B0DD   AGFB027R25A(.|R0)

or, for the NT400D11:

$ jtagconfig
1) USB-BlasterII [1-5]
  C34320DD   AGFA014R24C(.|B|AA)

5. Navigate to the directory containing the jic file:

source setup.sh
cd $VOLLO_SDK/bitstream

6. Set the JTAG clock frequency of the device you want to program to 16 MHz. Specify the device by providing the name returned by jtagconfig:

jtagconfig --setparam "IA-840F [1-5.2]" JtagClock 16M

or, for the NT400D11:

jtagconfig --setparam "USB-BlasterII [1-5]" JtagClock 16M

7. Start the programming operation on the chosen device. This takes around 20 minutes. For the IA-840F:

quartus_pgm -c "IA-840F [1-5.2]" -m JTAG -o "ipv;vollo-ia840f-c3b64.jic"

or, for the IA-420F:

quartus_pgm -c "IA-420F [1-5.2]" -m JTAG -o "ipv;vollo-ia420f-c6b32.jic"

or, for the NT400D11:

quartus_pgm -c "USB-BlasterII [1-5]" -m JTAG -o "ipv;vollo-nt400d1-c6b32.jic"

8. Go back to step 6 and program any other devices.

9. Power off the system and start it back up. The bitstream will now be loaded onto the FPGA.

For the configuration process to be triggered, the board has to register the power being off. It is recommended to turn the power off and then wait a few seconds before turning the power back on to ensure this happens.

10. Check a Vollo bitstream is loaded:

$ lspci -d 1ed9:766f
51:00.0 Processing accelerators: Myrtle.ai Device 766f (rev 01)

Check the correct Vollo bitstream is loaded:

vollo-tool bitstream-check bitstream/<bitstream-name>.json

Programming the FPGA via PCIe

NOTE: this can only be done with an FPGA that is already programmed with a Vollo bitstream.

1. Load the kernel driver:

sudo ./load-kernel-driver.sh

2. Check the current bitstream information:

source setup.sh
vollo-tool bitstream-info

3. Check that the device is set up for remote system updates by running the command below, with device index representing the index of the device you want to update, in the order shown in the previous command, starting from 0. It should print a JSON string to the terminal showing the device status.

vollo-tool fpga-config rsu-status <device index>

4. Update the USER_IMAGE partition of the flash with the new bitstream image contained in the rpd archive in the $VOLLO_SDK/bitstream directory. This should take around 5 minutes. Do not interrupt this process until it completes.

sudo ./load-kernel-driver.sh
vollo-tool fpga-config overwrite-partition <device index> <.rpd.tar.gz file> USER_IMAGE

5. Repeat step 4 for any other devices you wish to update.

6. Power off the system and start it back up.

For the configuration process to be triggered, the board has to register the power being off. It is recommended to turn the power off and then wait a few seconds before turning the power back on to ensure this happens.

7. Repeat steps 1, 2 and 3. The bitstream-info command should show that the updated bitstream has been loaded (e.g. a newer release date), and the output of the rsu-status command should show all zeroes for the error_code and failing_image_address fields.

8. Check the correct Vollo bitstream is loaded:

sudo ./load-kernel-driver.sh
vollo-tool bitstream-check bitstream/<bitstream-name>.json
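After power cycling, the rsu-status check (all zeroes in error_code and failing_image_address) can be scripted. The exact JSON layout printed by vollo-tool fpga-config rsu-status is not shown in this guide, so this sketch assumes only that those two keys appear somewhere in the JSON, as integers or as decimal/hex strings; the function names are hypothetical.

```python
import json


def _values_for(node, keys):
    """Yield every value stored under one of `keys`, at any nesting depth."""
    if isinstance(node, dict):
        for key, value in node.items():
            if key in keys:
                yield value
            else:
                yield from _values_for(value, keys)
    elif isinstance(node, list):
        for item in node:
            yield from _values_for(item, keys)


def _is_zero(value):
    """Accept 0 as an integer or as a string such as "0" or "0x00000000"."""
    if isinstance(value, bool):
        return False
    if isinstance(value, int):
        return value == 0
    if isinstance(value, str):
        try:
            return int(value, 0) == 0
        except ValueError:
            return False
    return False


def rsu_status_ok(rsu_json):
    """True if all error_code and failing_image_address fields are zero (and at least one was found)."""
    fields = list(_values_for(json.loads(rsu_json), {"error_code", "failing_image_address"}))
    return bool(fields) and all(_is_zero(v) for v in fields)
```

Pass it the text captured from the rsu-status command for each device index you updated.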

Programming the Silicom-fb4CGg3 FPGA

Programming the FPGA over PCIe

Unless something has gone wrong, you should always be able to program the FPGA over PCIe.

You can check if the device is programmed with a Myrtle.ai Vollo bitstream by running:

$ lspci -d 1ed9:

01:00.0 Processing accelerators: Myrtle.ai Device 000a

If the device has not been programmed with the Vollo bitstream then it likely has the factory image which is also suitable for programming over PCIe:

$ lspci -d 1c2c:

01:00.0 Ethernet controller: Silicom Denmark Device 0001

If the device is not enumerating at all please contact us.

The following instructions will program the Vollo bitstream over PCIe:

1. First load the kernel driver:

sudo ./load-kernel-driver.sh vfio

There may be compilation issues with your version of Linux. This has been checked with Rocky Linux 8.10. If there is an issue with your system, please contact us.

2. Once the kernel driver is loaded, you can program the flash with vollo-tool:

sudo $VOLLO_SDK/bin/vollo-tool fpga-config overwrite-partition ${device_index:?} $VOLLO_SDK/bitstream/vollo-silicom-fb4CGg3@VU09P-3-c3b32.bit USER_IMAGE

The progress will be displayed and it should take around 5 minutes to program the flash. You will need to power cycle the host for the new bitstream to be loaded.

3. If successful, the device should now enumerate as a Myrtle.ai Vollo device:

$ lspci -d 1ed9:
01:00.0 Processing accelerators: Myrtle.ai Device 000a
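When scripting these checks, the lspci output can be parsed to confirm that Myrtle.ai functions are present. A sketch assuming the output format shown in this guide (device IDs seen here include 766f, 000a and 100a); myrtle_devices is a hypothetical helper.

```python
import re

# Matches lines such as "01:00.0 Processing accelerators: Myrtle.ai Device 000a".
_MYRTLE_LINE = re.compile(
    r"^(?P<address>[0-9a-fA-F:.]+) .*Myrtle\.ai Device (?P<device>[0-9a-fA-F]{4})"
)


def myrtle_devices(lspci_output):
    """Return (PCI address, device ID) pairs for Myrtle.ai functions in `lspci -d 1ed9:` output."""
    found = []
    for line in lspci_output.splitlines():
        match = _MYRTLE_LINE.match(line.strip())
        if match:
            found.append((match.group("address"), match.group("device").lower()))
    return found


sample = """01:00.0 Processing accelerators: Myrtle.ai Device 100a
01:00.1 Processing accelerators: Myrtle.ai Device 000a"""
print(myrtle_devices(sample))  # [('01:00.0', '100a'), ('01:00.1', '000a')]
```

In a script, the input text would come from running lspci -d 1ed9: (for example through Python's subprocess module); an empty result means no Vollo bitstream is enumerating.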

Programming the V80 FPGA

Download the bitstream for your FPGA

The bitstream is available on the GitHub Release page alongside the Vollo SDK. For example, to download the bitstream for the AMD V80 board with the c6b32 configuration of Vollo:

curl -LO https://github.com/MyrtleSoftware/vollo-sdk/releases/download/v26.0.2/vollo-amd-v80-c6b32-26.0.tar.gz

mkdir -p $VOLLO_SDK/bitstream

tar -xzf vollo-amd-v80-c6b32-26.0.tar.gz -C $VOLLO_SDK/bitstream

Alternatively, for the AMD v80-LL, use:

curl -LO https://github.com/MyrtleSoftware/vollo-sdk/releases/download/v26.0.2/vollo-amd-v80-ll-c6b32-26.0.tar.gz

mkdir -p $VOLLO_SDK/bitstream

tar -xzf vollo-amd-v80-ll-c6b32-26.0.tar.gz -C $VOLLO_SDK/bitstream

Programming the FPGA via JTAG

Programming a V80 board over JTAG is necessary if the board does not yet have a Vollo image loaded on it or if the device does not enumerate correctly. Otherwise, programming over PCIe is preferred; if the board does not enumerate, or there is some other issue with PCIe programming, JTAG programming is the only option.

This requires a USB cable to be connected to the accelerator card and Vivado to be installed on the system so that the device can be programmed over JTAG.

1. Download and install Vivado Lab Edition:

   - Navigate to the Vivado Design Tools download page.
   - Under "Vivado Lab Solutions" find "Vivado 2025.2: Lab Edition - Linux (TAR/GZIP - 1.99 GB)" (later versions may be available).
   - Download the file and extract it to a directory of your choice. You will need an AMD account to download the file. You can create an account for free.
   - Pick a location to install Vivado_Lab, e.g. /opt/Xilinx (a user directory like ~/Xilinx is also fine):

VIVADO_DIR=~/Xilinx
mkdir -p $VIVADO_DIR

   - Extract the tarball:

tar xf Vivado_Lab_Lin_2025.2_1114_2157.tar
cd Vivado_Lab_Lin_2025.2_1114_2157

   - Run the installer:

./xsetup --agree 3rdPartyEULA,XilinxEULA --batch Install --edition "Vivado Lab Edition (Standalone)" --location $VIVADO_DIR

   - Check that installation was successful:

$ $VIVADO_DIR/2025.2/Vivado_Lab/bin/vivado_lab -version
Vivado Lab Edition v2025.2 (64-bit)
SW Build 6299465 on Fri Nov 14 21:19:43 MST 2025
Tool Version Limit: 2025.11
Copyright 1986-2022 Xilinx, Inc. All Rights Reserved.
Copyright 2022-2025 Advanced Micro Devices, Inc. All Rights Reserved.

2. Run the program_v80_fpt.tcl script to program the V80 board:

sudo $VIVADO_DIR/2025.2/Vivado_Lab/bin/vivado_lab -mode batch -source ./flash_vollo-amd-v80-c6b32.tcl

This prints out a lot of lines while programming and takes about 10 minutes.

If you get an error like this:

ERROR: [Labtoolstcl 44-469] There is no current hw_target.

make sure that you ran vivado_lab with sudo and that the USB cable is plugged in.

3. After programming you must power cycle the host for the new bitstream to be loaded.

Sometimes a V80 host machine will hang on boot. You may need to force another power cycle of the host to bring it back. Occasionally a power cycle isn't enough and you may need to turn the power off for several minutes before turning it back on.

4. If successful, the device should now enumerate as a Myrtle.ai Vollo device:

$ lspci -d 1ed9:
01:00.0 Processing accelerators: Myrtle.ai Device 100a
01:00.1 Processing accelerators: Myrtle.ai Device 000a

Programming the FPGA over PCIe

If your FPGA is already programmed with the Vollo accelerator then you can update the bitstream over PCIe. You can check if the device is programmed with the Myrtle.ai Vollo bitstream by running:

$ lspci -d 1ed9:

01:00.0 Processing accelerators: Myrtle.ai Device 100a

01:00.1 Processing accelerators: Myrtle.ai Device 000a

If the device has not been programmed with the Vollo bitstream then you will need to program the board over JTAG. See Programming the FPGA via JTAG above.

Programming over PCIe is the preferred method of programming the board as it is faster than programming over JTAG, and does not require a USB programming cable or for Vivado to be installed.

1. Build and insert the ami driver:

cd ami_kernel_driver
make
sudo insmod ami.ko

There may be compilation issues with your version of Linux. This has been checked with Rocky Linux 8.10 and Ubuntu 22.04. If there is an issue with your system, please contact us.

2. Once the kernel driver is loaded, you can program the flash with vollo-tool (which uses ami_tool). If you only have one board, device_index is 0.

sudo $VOLLO_SDK/bin/vollo-tool fpga-config overwrite-partition ${device_index:?} $VOLLO_SDK/bitstream/vollo-amd-v80-c6b32.pdi USER_IMAGE

There will be a progress bar and it should take around 5 minutes to program the flash. You will need to power cycle the host for the new bitstream to be loaded.

Sometimes a V80 host machine will hang on boot. You may need to force another power cycle of the host to bring it back. Occasionally a power cycle isn't enough and you may need to turn the power off for several minutes before turning it back on.

3. If successful, the device should now enumerate as a Myrtle.ai Vollo device:

$ lspci -d 1ed9:
01:00.0 Processing accelerators: Myrtle.ai Device 100a
01:00.1 Processing accelerators: Myrtle.ai Device 000a

Licensing

Vollo is licensed on a per-device basis.

Redeeming licenses with vollo-tool

You will receive a purchase-token with your Vollo purchase. The purchase-token can be used to redeem Vollo licenses for a set number of devices.

To see the number of credits (i.e. the number of devices which can be redeemed) on your purchase-token, run:

source setup.sh

vollo-tool license num-remaining-devices -t <purchase-token>

To redeem devices on your purchase token:

1. Load the kernel driver if you haven't already done so:

sudo ./load-kernel-driver.sh

2. Run vollo-tool device-ids. This will enumerate all Vollo accelerators and output their device IDs.

vollo-tool device-ids | tee vollo.devices

3. Run vollo-tool license redeem-device, passing the device IDs you wish to generate licenses for. This will print a breakdown of which devices will consume credits on the purchase-token.

vollo-tool license redeem-device -t <purchase-token> --device-ids <device IDs>

Alternatively, you can pass the vollo.devices output from the previous step if you wish to redeem licenses for all devices.

vollo-tool license redeem-device -t <purchase-token> --device-id-file <device ID file>

4. When you have confirmed which devices will consume credits on the purchase-token, run vollo-tool license redeem-device --consume-credits to generate the licenses. The licenses will be printed to stdout.

vollo-tool license redeem-device -t <purchase-token> --device-ids <device IDs> --consume-credits | tee vollo.lic

The licenses redeemed on a purchase token can be viewed at any time by running vollo-tool license view-licenses:

vollo-tool license view-licenses -t <purchase-token> | tee vollo.lic

Installing a license

1. The license file location should be set in the environment variable MYRTLE_LICENSE:

export MYRTLE_LICENSE=<license file>

2. Check that the license for your device(s) is being recognised:

vollo-tool license-check

If successful, the output should look like this:

Ok: found 2 devices with valid licenses

Running an example

The Vollo SDK contains a trivial program for each accelerator to check if the accelerator is working.

1. Ensure you have run the setup steps:

cd <vollo-sdk>
sudo ./load-kernel-driver.sh
source setup.sh
export MYRTLE_LICENSE=<your-license-file>

2. Compile the C runtime example:

(cd example; make)

3. Run the example.

For a block-size 64 accelerator such as vollo-ia840f-c3b64 or vollo-ia840f-c2b64d:

./example/vollo-example example/identity_b64.vollo

For a block-size 32 accelerator such as vollo-ia420f-c6b32 or vollo-v80-c6b32:

./example/vollo-example example/identity_b32.vollo

You should see an output similar to the following:

Using program: "example/identity_b64.vollo"
Using vollo-rt version: 18.0.0
Using Vollo accelerator with 3 core(s) and block_size 64
Program metadata for model 0:
  1 input with shape: [128]
  1 output with shape: [128]
Starting 10000 inferences
Done
Ran 10000 inferences in 0.020185 s with:
  mean latency of 2.004259 us
  99% latency of 2.176000 us
  throughput of 495411.228723 inf/s
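The summary figures in this output are related by simple arithmetic, which can help when comparing runs. A sketch using the numbers printed by the example:

```python
inferences = 10_000
elapsed_s = 0.020185          # "Ran 10000 inferences in 0.020185 s"
mean_latency_s = 2.004259e-6  # "mean latency of 2.004259 us"

# Throughput is simply inferences / elapsed time. The result differs slightly
# from the printed 495411 inf/s because the elapsed time is rounded in the output.
throughput = inferences / elapsed_s
print(f"{throughput:.0f} inf/s")

# Little's law: average number of inferences in flight = throughput x mean latency.
in_flight = throughput * mean_latency_s
print(f"{in_flight:.2f} inferences in flight on average")  # 0.99
```

A product close to 1 suggests that the example keeps roughly one inference in flight at a time; that is an inference from the numbers, not documented behaviour of the example.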

Running the benchmark

The release comes with a benchmark script that can be used to measure the performance of the accelerator for a variety of models. The script uses the Vollo compiler to compile the models for your accelerator and then runs them on the accelerator to measure their performance.

1. Install the script dependencies:

sudo apt install python3-venv jq

Note that the compiler requires Python 3.7 or later.

2. Ensure you have run the setup steps:

cd <vollo-sdk>
sudo ./load-kernel-driver.sh
source setup.sh
export MYRTLE_LICENSE=<your-license-file>

3. Run the benchmark:

$VOLLO_SDK/example/benchmark.sh

You can cross-reference your numbers with those in the Benchmarks section of the documentation.
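The prerequisites for the benchmark can be checked before launching the script. A minimal sketch, assuming setup.sh exports VOLLO_SDK (this guide relies on $VOLLO_SDK after sourcing it); preflight is a hypothetical helper, and it does not check that the kernel driver is loaded or that python3-venv is installed.

```python
import os
import shutil
import sys


def preflight(env=None, version=None, which=shutil.which):
    """List problems that would stop the benchmark script; an empty list means ready."""
    env = os.environ if env is None else env
    version = tuple(sys.version_info[:2]) if version is None else tuple(version)
    problems = []
    if version < (3, 7):
        problems.append("the Vollo compiler requires Python 3.7 or later")
    if which("jq") is None:
        problems.append("jq is not installed (sudo apt install python3-venv jq)")
    for name in ("VOLLO_SDK", "MYRTLE_LICENSE"):
        if not env.get(name):
            problems.append(f"{name} is not set; run the setup steps first")
    return problems


print(preflight() or "ready to run the benchmark")
```

The env, version and which parameters exist only so the checks can be exercised without touching the real environment.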

Vollo Runtime

The Vollo runtime provides a low latency asynchronous inference API for timing critical inference requests on the Vollo accelerator.

A couple of example C programs that use the Vollo runtime API are included in the installation in the example/ directory.

In order to use the Vollo runtime you need to have an accelerator set up:

- A programmed Intel Agilex, Silicom fb4CGg3 or AMD V80 accelerator
- A loaded kernel driver and an installed license
- The environment set up with source setup.sh

Python API

The Vollo SDK includes Python bindings for the Vollo runtime. These can be more convenient than the C API for e.g. testing Vollo against PyTorch models.

The API for the Python bindings can be found here.

A small example of using the Python bindings is provided here.

C API

The Vollo runtime API is a C API with simple types and functions, designed to be straightforward to use from any language with a C FFI.

- Header file: $VOLLO_SDK/include/vollo-rt.h
- Dynamic library: $VOLLO_SDK/lib/libvollo_rt.so
- Static library: $VOLLO_SDK/lib/libvollo_rt.a

It was built against GLIBC version 2.17.

To compile against Vollo RT with a standard C compiler, you can use the following flags:

-I $VOLLO_SDK/include -L $VOLLO_SDK/lib -lvollo_rt

These are the main steps (in order) a program using vollo_rt will follow:

1. Initialise the Vollo runtime using vollo_rt_init.
2. Add Vollo accelerators to the runtime using vollo_rt_add_accelerator (note: the current release only supports one accelerator).
3. Load a Vollo program onto the Vollo accelerators with vollo_rt_load_program.
4. Optionally, inspect the metadata about the models in the program using API calls such as vollo_rt_num_models and vollo_rt_model_num_inputs.
5. Queue and run inference jobs by first calling vollo_rt_add_job_bf16 (or vollo_rt_add_job_fp32) and then polling in a loop for their completion using vollo_rt_poll. You can queue several jobs before calling vollo_rt_poll, or add extra jobs at any point.
6. Finally, call vollo_rt_destroy to release resources.
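The queue-then-poll pattern in these steps can be illustrated without hardware. The following is a pure-Python simulation of the control flow only: SimulatedRuntime is hypothetical, and its add_job and poll methods merely play the roles of vollo_rt_add_job_bf16 and vollo_rt_poll; they do not mirror the real C signatures.

```python
from collections import deque


class SimulatedRuntime:
    """Hypothetical stand-in for the runtime: jobs complete in FIFO order, one per poll."""

    def __init__(self):
        self._pending = deque()
        self._next_id = 0

    def add_job(self, payload):
        """Queue a job (the role vollo_rt_add_job_bf16 plays) and return an id for it."""
        job_id = self._next_id
        self._next_id += 1
        self._pending.append((job_id, payload))
        return job_id

    def poll(self):
        """Return ids of jobs completed since the last call (the role vollo_rt_poll plays)."""
        if not self._pending:
            return []
        job_id, _ = self._pending.popleft()
        return [job_id]


def run_batch(runtime, payloads):
    """Queue every job up front, then poll in a loop until all of them have completed."""
    outstanding = {runtime.add_job(p) for p in payloads}
    completed = []
    while outstanding:
        for job_id in runtime.poll():
            outstanding.discard(job_id)
            completed.append(job_id)
    return completed


print(run_batch(SimulatedRuntime(), ["a", "b", "c"]))  # [0, 1, 2]
```

Queuing several jobs before polling lets more than one inference be in flight; with the real runtime, the order and timing of completions come from the accelerator rather than from a FIFO.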

The API is designed to return errors explicitly wherever it can, so that the user can handle them as they see fit. The metadata functions instead panic if any of the documented preconditions they rely on are not met. Any other crash is considered a bug, and we would be very grateful if you could tell us about it.

Initialisation

A vollo context is created by calling vollo_rt_init.

Add an accelerator by using the vollo_rt_add_accelerator function.

/**
 * Initialise the vollo-rt context. This must be called before any other vollo-rt functions.
 *
 * Logging level can be configured by setting the environment variable `VOLLO_RT_LOG` to one of:
 * "error", "warn", "info", "debug", or "trace"
 */
vollo_rt_error_t vollo_rt_init(vollo_rt_context_t* context_ptr);

/**
 * Destroy vollo-rt context, releasing its associated resources.
 */
void vollo_rt_destroy(vollo_rt_context_t vollo);

/**
 * Add an accelerator.
 * The accelerator is specified by its index. The index refers to an accelerator in the sorted list
 * of PCI addresses. This should be called after `vollo_rt_init` but before `vollo_rt_load_program`
 */
vollo_rt_error_t vollo_rt_add_accelerator(vollo_rt_context_t vollo, size_t accelerator_index);
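The accelerator_index argument refers to the sorted list of PCI addresses. A sketch of that mapping, assuming "sorted" means numeric order of domain, bus, device and function (for zero-padded, lowercase addresses as printed by lspci, plain string order gives the same result). The helpers are hypothetical and not part of vollo-rt.

```python
def parse_pci_address(address):
    """Split "[domain:]bus:device.function" (hex fields) into integers; domain defaults to 0."""
    parts = address.split(":")
    if len(parts) == 2:
        parts = ["0"] + parts
    domain, bus, dev_fn = parts
    device, function = dev_fn.split(".")
    return (int(domain, 16), int(bus, 16), int(device, 16), int(function, 16))


def accelerator_indices(addresses):
    """Map each PCI address to its position in the sorted list of addresses."""
    ordered = sorted(addresses, key=parse_pci_address)
    return {address: index for index, address in enumerate(ordered)}


print(accelerator_indices(["b1:00.0", "51:00.0", "0000:17:00.0"]))
# {'0000:17:00.0': 0, '51:00.0': 1, 'b1:00.0': 2}
```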

Loading a program

A program is loaded onto the Vollo accelerator using the vollo_rt_load_program function.

/**
 * Load a program onto the Vollo accelerators.
 * This should be called after `vollo_rt_add_accelerator`
 *
 * A Vollo program is generated by the Vollo compiler, it is typically named
 * "<program_name>.vollo".
 * The program is intended for a specific hardware config (number of accelerators,
 * cores and other configuration options), this function will return an
 * error if any accelerator configuration is incompatible with the program.
 * Once loaded, the program provides inference for several models concurrently.
 *
 * Note: This should only be called once per `vollo_rt_context_t`, as such if
 * a program needs to be changed or reset, first `vollo_rt_destroy` the current
 * context, then start a new context with `vollo_rt_init`.
 */
vollo_rt_error_t vollo_rt_load_program(vollo_rt_context_t vollo, const char* program_path);

Model metadata

Once a program is loaded, it provides inference for one or more models. Metadata about a model is obtained with the vollo_rt_model_* functions.

Each model can have multiple distinct inputs and outputs, and each input and output has a multi-dimensional shape associated with it. All of the metadata is defined by the program as produced by the Vollo compiler, and all shapes are statically defined.

Some models can be compiled to stream statefully over one dimension. That dimension is then removed from the inference shape, but its position can be recovered from the model metadata (see vollo_rt_model_input_streaming_dim and vollo_rt_model_output_streaming_dim).

/**
 * Inspect the number of models in the program loaded onto the vollo.
 *
 * Programs can contain multiple models; a `model_index` is used to select a
 * specific model.
 */
size_t vollo_rt_num_models(vollo_rt_context_t vollo);

/**
 * Get the number of inputs of a model
 *
 * Each input has its own distinct shape
 *
 * Requirements (panics otherwise):
 * - a program was loaded with `vollo_rt_load_program`
 * - `model_index < vollo_rt_num_models`
 */
size_t vollo_rt_model_num_inputs(vollo_rt_context_t vollo, size_t model_index);

/**
 * Get the number of outputs of a model
 *
 * Each output has its own distinct shape
 *
 * Requirements (panics otherwise):
 * - a program was loaded with `vollo_rt_load_program`
 * - `model_index < vollo_rt_num_models`
 */
size_t vollo_rt_model_num_outputs(vollo_rt_context_t vollo, size_t model_index);

/**
 * Get the shape for input at a given index
 *
 * The return value is an array of dims containing the input shape.
 * Use `vollo_rt_model_input_shape_len` to get the number of axes in the shape.
 *
 * For backwards compatibility the array is also 0-terminated, but that should not be relied upon
 * in order to correctly support shapes containing a 0 dimension
 *
 * The value lives for as long as the model
 *
 * Requirements (panics otherwise):
 * - a program was loaded with `vollo_rt_load_program`
 * - `model_index < vollo_rt_num_models`
 * - `input_index < vollo_rt_model_num_inputs`
 */
const size_t* vollo_rt_model_input_shape(
    vollo_rt_context_t vollo, size_t model_index, size_t input_index);

/**
 * Get the number of axes in the shape for the input at a given index
 *
 * Requirements (panics otherwise):
 * - a program was loaded with `vollo_rt_load_program`
 * - `model_index < vollo_rt_num_models`
 * - `input_index < vollo_rt_model_num_inputs`
 */
size_t vollo_rt_model_input_shape_len(
    vollo_rt_context_t vollo, size_t model_index, size_t input_index);
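A shape array may contain a 0 dimension, so code that walks it should use the length from `vollo_rt_model_input_shape_len` (or `vollo_rt_model_output_shape_len`) rather than the 0 terminator. The following minimal sketch uses a hypothetical `format_shape` helper that renders a shape for logging.

```c
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Render `shape` as "(d0, d1, ...)" using an explicit axis count `len`.
 * Stopping at the first 0 entry would truncate shapes with a 0 dimension.
 * Returns 0 on success, -1 if `buf` is too small. */
static int format_shape(const size_t* shape, size_t len, char* buf,
                        size_t buf_size) {
  int n = snprintf(buf, buf_size, "(");
  if (n < 0 || (size_t)n >= buf_size) return -1;
  size_t pos = (size_t)n;
  for (size_t i = 0; i < len; i++) {
    n = snprintf(buf + pos, buf_size - pos, i == 0 ? "%zu" : ", %zu", shape[i]);
    if (n < 0 || (size_t)n >= buf_size - pos) return -1;
    pos += (size_t)n;
  }
  n = snprintf(buf + pos, buf_size - pos, ")");
  if (n < 0 || (size_t)n >= buf_size - pos) return -1;
  return 0;
}
```

With the runtime, `shape` and `len` would come from `vollo_rt_model_input_shape(ctx, m, i)` and `vollo_rt_model_input_shape_len(ctx, m, i)`. For the shape `(4, 0, 8)`, a terminator-based loop would stop after the first axis.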

/**
 * Get the shape for output at a given index
 *
 * The return value is an array of dims containing the output shape.
 * Use `vollo_rt_model_output_shape_len` to get the number of axes in the shape.
 *
 * For backwards compatibility the array is also 0-terminated, but that should not be relied upon
 * in order to correctly support shapes containing a 0 dimension
 *
 * The value lives for as long as the model
 *
 * Requirements (panics otherwise):
 * - a program was loaded with `vollo_rt_load_program`
 * - `model_index < vollo_rt_num_models`
 * - `output_index < vollo_rt_model_num_outputs`
 */
const size_t* vollo_rt_model_output_shape(
    vollo_rt_context_t vollo, size_t model_index, size_t output_index);

/**
 * Get the number of axes in the shape for the output at a given index
 *
 * Requirements (panics otherwise):
 * - a program was loaded with `vollo_rt_load_program`
 * - `model_index < vollo_rt_num_models`
 * - `output_index < vollo_rt_model_num_outputs`
 */
size_t vollo_rt_model_output_shape_len(
    vollo_rt_context_t vollo, size_t model_index, size_t output_index);

/**
 * Get the number of elements for input at a given index
 *
 * This is simply the product of the dimensions returned by `vollo_rt_model_input_shape`,
 * it is provided to make it easier to allocate the correct number of elements.
 *
 * Requirements (panics otherwise):
 * - a program was loaded with `vollo_rt_load_program`
 * - `model_index < vollo_rt_num_models`
 * - `input_index < vollo_rt_model_num_inputs`
 */
size_t vollo_rt_model_input_num_elements(
    vollo_rt_context_t vollo, size_t model_index, size_t input_index);

/**
 * Get the number of elements for output at a given index
 *
 * This is simply the product of the dimensions returned by `vollo_rt_model_output_shape`,
 * it is provided to make it easier to allocate the correct number of elements.
 *
 * Requirements (panics otherwise):
 * - a program was loaded with `vollo_rt_load_program`
 * - `model_index < vollo_rt_num_models`
 * - `output_index < vollo_rt_model_num_outputs`
 */
size_t vollo_rt_model_output_num_elements(
    vollo_rt_context_t vollo, size_t model_index, size_t output_index);
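The element counts are the product of the shape dimensions. The sketch below recomputes that product from a shape and its length, which can be useful as a cross-check or when sizing a buffer yourself. The name `shape_num_elements` and the overflow flag are illustrative additions, not part of vollo-rt.

```c
#include <stddef.h>
#include <stdint.h>

/* Product of the first `len` entries of `shape`.
 * A shape containing a 0 dimension gives 0; an empty shape gives the empty
 * product, 1. Sets *overflow to 1 (and returns 0) if the product does not
 * fit in size_t, otherwise sets it to 0. */
static size_t shape_num_elements(const size_t* shape, size_t len,
                                 int* overflow) {
  size_t n = 1;
  *overflow = 0;
  for (size_t i = 0; i < len; i++) {
    if (shape[i] != 0 && n > SIZE_MAX / shape[i]) {
      *overflow = 1;
      return 0;
    }
    n *= shape[i];
  }
  return n;
}
```

In practice, prefer the runtime's own `vollo_rt_model_input_num_elements` and `vollo_rt_model_output_num_elements`. For example, `calloc(vollo_rt_model_input_num_elements(ctx, m, i), sizeof(float))` allocates an fp32 input buffer.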

/**
 * In a streaming model, the streaming dimension is not part of the shape.
 *
 * - It returns -1 when there is no streaming dimension
 * - It otherwise returns the dim index
 * For example, for a shape `(a, b, c)` and streaming dim index 1, the full shape is:
 * `(a, streaming_dim, b, c)`
 *
 * Requirements (panics otherwise):
 * - a program was loaded with `vollo_rt_load_program`
 * - `model_index < vollo_rt_num_models`
 * - `input_index < vollo_rt_model_num_inputs`
 */
int vollo_rt_model_input_streaming_dim(
    vollo_rt_context_t vollo, size_t model_index, size_t input_index);

/**
 * In a streaming model, the streaming dimension is not part of the shape.
 *
 * - It returns -1 when there is no streaming dimension
 * - It otherwise returns the dim index
 * For example, for a shape `(a, b, c)` and streaming dim index 1, the full shape is:
 * `(a, streaming_dim, b, c)`
 *
 * Requirements (panics otherwise):
 * - a program was loaded with `vollo_rt_load_program`
 * - `model_index < vollo_rt_num_models`
 * - `output_index < vollo_rt_model_num_outputs`
 */
int vollo_rt_model_output_streaming_dim(
    vollo_rt_context_t vollo, size_t model_index, size_t output_index);
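The documented rule states that for shape `(a, b, c)` and streaming dim index 1, the full shape is `(a, streaming_dim, b, c)`. You can therefore rebuild the full shape by re-inserting the streaming axis at that index. In the following minimal sketch, `full_shape` and its `stream_len` parameter are hypothetical. `stream_len` is the length you choose to report for the streamed axis, such as a number of timesteps.

```c
#include <stddef.h>

/* Rebuild a full shape by inserting an axis of length `stream_len` at
 * `streaming_dim`, the value returned by vollo_rt_model_input_streaming_dim
 * or vollo_rt_model_output_streaming_dim. `full` needs room for len + 1
 * entries. Returns the number of axes written; an out-of-range
 * `streaming_dim` (greater than `len`) writes nothing and returns 0. */
static size_t full_shape(const size_t* shape, size_t len, int streaming_dim,
                         size_t stream_len, size_t* full) {
  size_t out = 0;
  if (streaming_dim < 0) {
    /* -1: no streaming dimension, so the shape is already complete. */
    for (size_t i = 0; i < len; i++) full[out++] = shape[i];
    return out;
  }
  if ((size_t)streaming_dim > len) return 0;
  /* Walk one position past the end so the axis can also be appended last. */
  for (size_t i = 0; i <= len; i++) {
    if (i == (size_t)streaming_dim) full[out++] = stream_len;
    if (i < len) full[out++] = shape[i];
  }
  return out;
}
```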

Running inference

The interface returns results asynchronously, so inference requests can be made as fast as the system can support them without blocking on output data being returned. This also allows multiple requests to run concurrently.

Before any compute is started, a job with associated input and output buffers needs to be registered with the runtime using either vollo_rt_add_job_bf16 or vollo_rt_add_job_fp32.

The bf16 variant uses bfloat16, which is effectively a truncated version of the single-precision floating point format fp32 (same exponent, smaller mantissa).

Note: do NOT use C floating point literals for bf16 values, as bf16 is simply a uint16_t in the API.
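Because bf16 values are raw 16-bit patterns, converting from fp32 means keeping the top 16 bits of the IEEE-754 single-precision encoding. The sketch below rounds to nearest with ties to even and keeps NaNs as NaNs. The function names are hypothetical, and this section does not say which rounding mode the runtime's own fp32 conversion uses. If your data is already fp32, vollo_rt_add_job_fp32 avoids the need for this conversion.

```c
#include <stdint.h>
#include <string.h>

/* fp32 -> bf16 bit pattern, rounding to nearest with ties to even. */
static uint16_t fp32_to_bf16(float f) {
  uint32_t bits;
  memcpy(&bits, &f, sizeof bits); /* reinterpret the float's bits safely */
  if ((bits & 0x7FFFFFFFu) > 0x7F800000u) {
    /* NaN: truncate and force a mantissa bit so it cannot become infinity. */
    return (uint16_t)((bits >> 16) | 0x0040u);
  }
  /* Add just under half an ulp, plus one if the kept LSB is odd (ties to even). */
  uint32_t lsb = (bits >> 16) & 1u;
  bits += 0x7FFFu + lsb;
  return (uint16_t)(bits >> 16);
}

/* bf16 bit pattern -> fp32; this direction is exact. */
static float bf16_to_fp32(uint16_t h) {
  uint32_t bits = (uint32_t)h << 16; /* discarded mantissa bits become 0 */
  float f;
  memcpy(&f, &bits, sizeof f);
  return f;
}
```

For example, `fp32_to_bf16(1.0f)` yields `0x3F80`. This is the value to write into a bf16 buffer, rather than the literal `1.0`.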

An fp32 variant is also provided, even though the Vollo accelerator expects its inputs and outputs to be in bf16. If you are working with fp32 data, prefer this variant over the bf16 one: it performs the conversion while copying to/from the DMA buffers, avoiding an extra copy.

/**
 * Sets up a computation on the vollo accelerator where the inputs and outputs are in brain-float 16
 * format.
 *
 * Note: The computation is only started on the next call to vollo_rt_poll. This way it is possible
 * to set up several computations that are kicked off at the same time.
 *
 * - vollo:
 *   the context that the computation should be run on
 * - model_index:
 *   the model to run
 * - user_ctx:
 *   a user context that will be returned on completion. This can be used to disambiguate when
 *   multiple models are running concurrently.
 *   NOTE: the jobs for a single model are guaranteed to come back in order, but the jobs for
 *   different models are not.
 * - input_data:
 *   a pointer to the start of an array of pointers, one to the start of the data for each input.
 *   The number of inputs is given by `vollo_rt_model_num_inputs`; each input's length is the
 *   product of the shape given by `vollo_rt_model_input_shape`
 *   (or, more conveniently, `vollo_rt_model_input_num_elements`).
 *   lifetime:
 *   - The outer array only needs to live until `vollo_rt_add_job_bf16` returns
 *   - The input buffers need to live until `vollo_rt_poll` returns with the completion for
 *     this job
 * - output_data:
 *   a pointer to the start of an array of pointers, one to the start of each output buffer.
 *   The number of outputs is given by `vollo_rt_model_num_outputs`; each output's length is
 *   the product of the shape given by `vollo_rt_model_output_shape`
 *   (or, more conveniently, `vollo_rt_model_output_num_elements`).
 *   lifetime:
 *   - The outer array only needs to live until `vollo_rt_add_job_bf16` returns
 *   - The output buffers need to live until `vollo_rt_poll` returns with the completion for
 *     this job
 */
vollo_rt_error_t vollo_rt_add_job_bf16(
    vollo_rt_context_t vollo,
    size_t model_index,
    uint64_t user_ctx,
    const bf16* const* input_data,
    bf16* const* output_data);

vollo_rt_error_t vollo_rt_add_job_fp32(
    vollo_rt_context_t vollo,
    size_t model_index,
    uint64_t user_ctx,
    const float* const* input_data,
    float* const* output_data);
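The `input_data` and `output_data` arguments are arrays of pointers, one per input or output. The buffers they point to must live longer than the outer arrays themselves. The following minimal sketch shows a job-owned set of fp32 buffers using a hypothetical `job_buffers` type. The element counts would come from `vollo_rt_model_input_num_elements` and `vollo_rt_model_output_num_elements`.

```c
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical owner of one job's fp32 input and output buffers. */
typedef struct {
  size_t num_inputs;
  size_t num_outputs;
  float** inputs;  /* inputs[i] holds input_elems[i] floats */
  float** outputs; /* outputs[o] holds output_elems[o] floats */
} job_buffers;

/* calloc that never asks for 0 bytes, so success is always non-NULL. */
static void* alloc_zeroed(size_t count, size_t size) {
  return calloc(count ? count : 1, size);
}

/* Free everything owned by `jb`; safe after a partial allocation. */
static void job_buffers_free(job_buffers* jb) {
  if (jb->inputs) {
    for (size_t i = 0; i < jb->num_inputs; i++) free(jb->inputs[i]);
  }
  if (jb->outputs) {
    for (size_t o = 0; o < jb->num_outputs; o++) free(jb->outputs[o]);
  }
  free(jb->inputs);
  free(jb->outputs);
  jb->inputs = jb->outputs = NULL;
}

/* Allocate zero-filled buffers. Returns 0 on success, -1 on failure. */
static int job_buffers_alloc(job_buffers* jb,
                             const size_t* input_elems, size_t num_inputs,
                             const size_t* output_elems, size_t num_outputs) {
  jb->num_inputs = num_inputs;
  jb->num_outputs = num_outputs;
  jb->inputs = alloc_zeroed(num_inputs, sizeof(float*));
  jb->outputs = alloc_zeroed(num_outputs, sizeof(float*));
  if (jb->inputs == NULL || jb->outputs == NULL) {
    free(jb->inputs);
    free(jb->outputs);
    jb->inputs = jb->outputs = NULL;
    return -1;
  }
  /* Mark every slot empty first so job_buffers_free is safe on failure. */
  for (size_t i = 0; i < num_inputs; i++) jb->inputs[i] = NULL;
  for (size_t o = 0; o < num_outputs; o++) jb->outputs[o] = NULL;
  for (size_t i = 0; i < num_inputs; i++) {
    jb->inputs[i] = alloc_zeroed(input_elems[i], sizeof(float));
    if (jb->inputs[i] == NULL) goto fail;
  }
  for (size_t o = 0; o < num_outputs; o++) {
    jb->outputs[o] = alloc_zeroed(output_elems[o], sizeof(float));
    if (jb->outputs[o] == NULL) goto fail;
  }
  return 0;
fail:
  job_buffers_free(jb);
  return -1;
}
```

Submitting would then look like `vollo_rt_add_job_fp32(ctx, model_index, user_ctx, (const float* const*)jb.inputs, jb.outputs)`. The cast is needed because C does not implicitly convert `float**` to `const float* const*`. The buffers must stay allocated until vollo_rt_poll reports the job as complete, so call `job_buffers_free` only after that. Heap-allocating the outer arrays is more than the API requires, since they only need to outlive the add_job call, but it keeps ownership in one place.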

To actually start and later complete an inference, you must call the vollo_rt_poll function multiple times. It is typically called in a loop (with a timeout) until some or all of the jobs are completed.
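The polling pattern above calls the poll function repeatedly, with a timeout, until the outstanding jobs are done. You can sketch this pattern independently of the exact vollo_rt_poll signature, which is given in its declaration. In this sketch, `poll_fn` is a hypothetical adapter type that returns the number of jobs completed during one call. `fake_poll` is a stand-in that lets the sketch run without an accelerator.

```c
#define _POSIX_C_SOURCE 199309L
#include <stddef.h>
#include <time.h>

/* Hypothetical adapter around vollo_rt_poll: returns how many jobs
 * completed during this call, or a negative value on error. */
typedef long (*poll_fn)(void* state);

/* Monotonic time in seconds, unaffected by wall-clock adjustments. */
static double now_seconds(void) {
  struct timespec ts;
  clock_gettime(CLOCK_MONOTONIC, &ts);
  return (double)ts.tv_sec + (double)ts.tv_nsec * 1e-9;
}

/* Busy-poll until `outstanding` jobs complete or `timeout_s` seconds pass.
 * Returns 0 when all jobs completed, -1 on a poll error, -2 on timeout. */
static int wait_for_jobs(poll_fn poll_once, void* state, size_t outstanding,
                         double timeout_s) {
  const double deadline = now_seconds() + timeout_s;
  while (outstanding > 0) {
    long done = poll_once(state);
    if (done < 0) return -1;
    /* Clamp so an over-report cannot wrap the unsigned counter. */
    outstanding -= ((size_t)done < outstanding) ? (size_t)done : outstanding;
    if (outstanding > 0 && now_seconds() > deadline) return -2;
  }
  return 0;
}

/* Stand-in for hardware: completes one pending job per call. */
static long fake_poll(void* state) {
  size_t* pending = state;
  if (*pending == 0) return 0;
  (*pending)--;
  return 1;
}
```

The loop busy-polls rather than sleeping. This assumes that latency matters more than CPU time on the polling thread. If that trade-off does not suit your application, add a back-off.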

/**
 * Poll the vollo accelerator for completion.
 *